
maestro Florence-2 fine-tuning #33


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,21 @@ | ||

| repos: | ||

| - repo: https://github.com/pre-commit/pre-commit-hooks | ||

| rev: v2.3.0 | ||

| hooks: | ||

| - id: check-yaml | ||

| - id: end-of-file-fixer | ||

| - id: trailing-whitespace | ||

| - repo: https://github.com/psf/black | ||

| rev: 24.8.0 | ||

| hooks: | ||

| - id: black | ||

| args: [--line-length=120] | ||

| - repo: https://github.com/pre-commit/mirrors-mypy | ||

| rev: v1.11.2 | ||

| hooks: | ||

| - id: mypy | ||

| - repo: https://github.com/PyCQA/flake8 | ||

| rev: 7.1.1 | ||

| hooks: | ||

| - id: flake8 | ||

| args: [--max-line-length=120] |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -1,142 +1,35 @@ | ||

|

|

||

| <div align="center"> | ||

|

|

||

| <h1>multimodal-maestro</h1> | ||

| <h1>maestro</h1> | ||

|

|

||

| <br> | ||

|

|

||

| [](https://badge.fury.io/py/maestro) | ||

| [](https://github.com/roboflow/multimodal-maestro/blob/main/LICENSE) | ||

| [](https://badge.fury.io/py/maestro) | ||

| [](https://huggingface.co/spaces/Roboflow/SoM) | ||

| [](https://colab.research.google.com/github/roboflow/multimodal-maestro/blob/develop/cookbooks/multimodal_maestro_gpt_4_vision.ipynb) | ||

| <p>coming: when it's ready...</p> | ||

|

|

||

| </div> | ||

|

|

||

| ## 👋 hello | ||

|

|

||

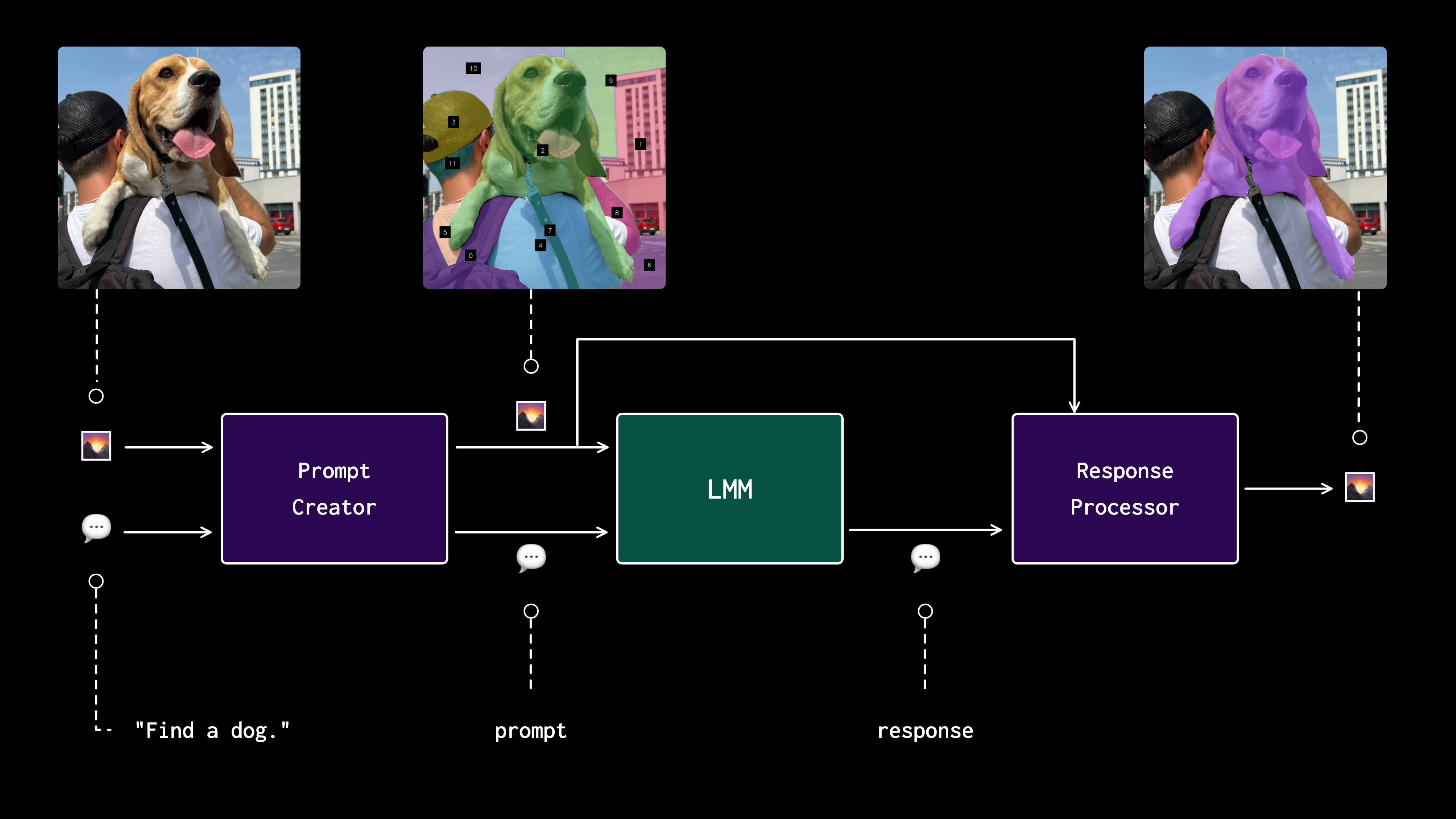

| Multimodal-Maestro gives you more control over large multimodal models to get the | ||

| outputs you want. With more effective prompting tactics, you can get multimodal models | ||

| to do tasks you didn't know (or think!) were possible. Curious how it works? Try our | ||

| [HF space](https://huggingface.co/spaces/Roboflow/SoM)! | ||

| **maestro** is a tool designed to streamline and accelerate the fine-tuning process for | ||

| multimodal models. It provides ready-to-use recipes for fine-tuning popular | ||

| vision-language models (VLMs) such as **Florence-2**, **PaliGemma**, and | ||

| **Phi-3.5 Vision** on downstream vision-language tasks. | ||

|

|

||

| ## 💻 install | ||

|

|

||

| ⚠️ Our package has been renamed to `maestro`. Install the package in a | ||

| [**3.11>=Python>=3.8**](https://www.python.org/) environment. | ||

| Pip install the maestro package in a | ||

| [**Python>=3.8**](https://www.python.org/) environment. | ||

|

|

||

| ```bash | ||

| pip install maestro | ||

| ``` | ||

|

|

||

| ## 🔌 API | ||

|

|

||

| 🚧 The project is still under construction. The redesigned API is coming soon. | ||

|

|

||

|  | ||

|

|

||

| ## 🧑🍳 prompting cookbooks | ||

|

|

||

| | Description | Colab | | ||

| |:----------------------------------------------------------------|:-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------:| | ||

| | Prompt LMMs with Multimodal Maestro | [](https://colab.research.google.com/github/roboflow/multimodal-maestro/blob/develop/cookbooks/multimodal_maestro_gpt_4_vision.ipynb) | | ||

| | Manually annotate ONE image and let GPT-4V annotate ALL of them | [](https://colab.research.google.com/github/roboflow/multimodal-maestro/blob/develop/cookbooks/grounding_dino_and_gpt4_vision.ipynb) | | ||

|

|

||

|

|

||

| ## 🚀 example | ||

|

|

||

| ``` | ||

| Find dog. | ||

|

|

||

| >>> The dog is prominently featured in the center of the image with the label [9]. | ||

| ``` | ||

|

|

||

| <details close> | ||

| <summary>👉 read more</summary> | ||

|

|

||

| <br> | ||

|

|

||

| - **load image** | ||

|

|

||

| ```python | ||

| import cv2 | ||

|

|

||

| image = cv2.imread("...") | ||

| ``` | ||

|

|

||

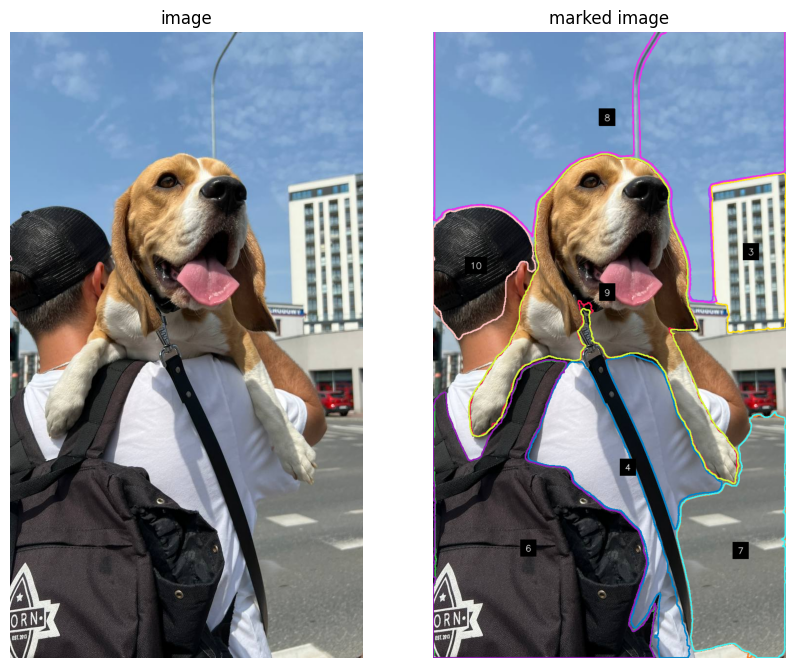

| - **create and refine marks** | ||

|

|

||

| ```python | ||

| import maestro | ||

|

|

||

| generator = maestro.SegmentAnythingMarkGenerator(device='cuda') | ||

| marks = generator.generate(image=image) | ||

| marks = maestro.refine_marks(marks=marks) | ||

| ``` | ||

|

|

||

| - **visualize marks** | ||

|

|

||

| ```python | ||

| mark_visualizer = maestro.MarkVisualizer() | ||

| marked_image = mark_visualizer.visualize(image=image, marks=marks) | ||

| ``` | ||

|  | ||

|

|

||

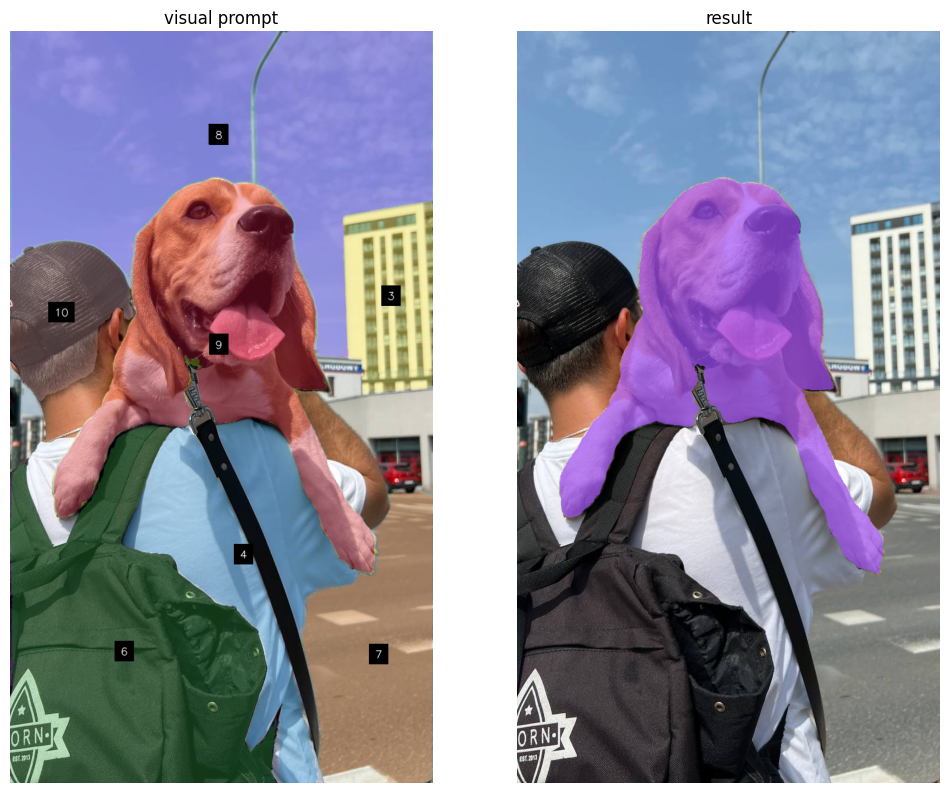

| - **prompt** | ||

|

|

||

| ```python | ||

| prompt = "Find dog." | ||

|

|

||

| response = maestro.prompt_image(api_key=api_key, image=marked_image, prompt=prompt) | ||

| ``` | ||

|

|

||

| ``` | ||

| >>> "The dog is prominently featured in the center of the image with the label [9]." | ||

| ``` | ||

|

|

||

| - **extract related marks** | ||

|

|

||

| ```python | ||

| masks = maestro.extract_relevant_masks(text=response, detections=refined_marks) | ||

| ``` | ||

|

|

||

| ``` | ||

| >>> {'6': array([ | ||

| ... [False, False, False, ..., False, False, False], | ||

| ... [False, False, False, ..., False, False, False], | ||

| ... [False, False, False, ..., False, False, False], | ||

| ... ..., | ||

| ... [ True, True, True, ..., False, False, False], | ||

| ... [ True, True, True, ..., False, False, False], | ||

| ... [ True, True, True, ..., False, False, False]]) | ||

| ... } | ||

| ``` | ||

|

|

||

| </details> | ||

|

|

||

|  | ||

| Documentation and Florence-2 fine-tuning examples for object detection and VQA coming | ||

| soon. | ||

|

|

||

| ## 🚧 roadmap | ||

|

|

||

| - [ ] Rewriting the `maestro` API. | ||

| - [ ] Update [HF space](https://huggingface.co/spaces/Roboflow/SoM). | ||

| - [ ] Documentation page. | ||

| - [ ] Add GroundingDINO prompting strategy. | ||

| - [ ] CogVLM demo. | ||

| - [ ] Qwen-VL demo. | ||

|

|

||

| ## 💜 acknowledgement | ||

|

|

||

| - [Set-of-Mark Prompting Unleashes Extraordinary Visual Grounding | ||

| in GPT-4V](https://arxiv.org/abs/2310.11441) by Jianwei Yang, Hao Zhang, Feng Li, Xueyan | ||

| Zou, Chunyuan Li, Jianfeng Gao. | ||

| - [The Dawn of LMMs: Preliminary Explorations with GPT-4V(ision)](https://arxiv.org/abs/2309.17421) | ||

| by Zhengyuan Yang, Linjie Li, Kevin Lin, Jianfeng Wang, Chung-Ching Lin, Zicheng Liu, | ||

| Lijuan Wang. | ||

|

|

||

| ## 🦸 contribution | ||

|

|

||

| We would love your help in making this repository even better! If you notice any bugs, | ||

| or if you have any suggestions for improvement, feel free to open an | ||

| [issue](https://github.com/roboflow/multimodal-maestro/issues) or submit a | ||

| [pull request](https://github.com/roboflow/multimodal-maestro/pulls). | ||

| - [ ] Release a CLI for predefined fine-tuning recipes. | ||

| - [ ] Multi-GPU fine-tuning support. | ||

| - [ ] Allow multi-dataset fine-tuning and support multiple tasks at the same time. |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,5 @@ | ||

| SEED_ENV = "SEED" | ||

| DEFAULT_SEED = "42" | ||

| CUDA_DEVICE_ENV = "CUDA_DEVICE" | ||

| DEFAULT_CUDA_DEVICE = "cuda:0" | ||

| HF_TOKEN_ENV = "HF_TOKEN" |
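The constants above only name environment variables and their defaults; the diff does not show how they are consumed. A minimal sketch of one plausible way to resolve them follows. The helper name `resolve_runtime_settings` and the returned dictionary layout are assumptions made for illustration, not part of the PR.

```python
import os

# Constants copied from the diff above.
SEED_ENV = "SEED"
DEFAULT_SEED = "42"
CUDA_DEVICE_ENV = "CUDA_DEVICE"
DEFAULT_CUDA_DEVICE = "cuda:0"
HF_TOKEN_ENV = "HF_TOKEN"


def resolve_runtime_settings(environ=None):
    """Hypothetical helper: read seed, device, and token from the environment."""
    environ = os.environ if environ is None else environ
    return {
        # Environment values are strings, so the seed is converted explicitly.
        "seed": int(environ.get(SEED_ENV, DEFAULT_SEED)),
        "device": environ.get(CUDA_DEVICE_ENV, DEFAULT_CUDA_DEVICE),
        # No default is defined for the token, so it is None when unset.
        "hf_token": environ.get(HF_TOKEN_ENV),
    }
```

Storing the default seed as the string `"42"` keeps it interchangeable with a value read from the environment, at the cost of an explicit `int(...)` conversion at the point of use.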

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,50 @@ | ||

| import json | ||

| import os | ||

| from typing import List, Dict, Any, Tuple | ||

|

|

||

| from PIL import Image | ||

| from transformers.pipelines.base import Dataset | ||

|

|

||

|

|

||

| class JSONLDataset: | ||

| def __init__(self, jsonl_file_path: str, image_directory_path: str): | ||

| self.jsonl_file_path = jsonl_file_path | ||

| self.image_directory_path = image_directory_path | ||

| self.entries = self._load_entries() | ||

|

|

||

| def _load_entries(self) -> List[Dict[str, Any]]: | ||

| entries = [] | ||

| with open(self.jsonl_file_path, "r") as file: | ||

| for line in file: | ||

| data = json.loads(line) | ||

| entries.append(data) | ||

| return entries | ||

|

|

||

| def __len__(self) -> int: | ||

| return len(self.entries) | ||

|

|

||

| def __getitem__(self, idx: int) -> Tuple[Image.Image, Dict[str, Any]]: | ||

| if idx < 0 or idx >= len(self.entries): | ||

| raise IndexError("Index out of range") | ||

|

|

||

| entry = self.entries[idx] | ||

| image_path = os.path.join(self.image_directory_path, entry["image"]) | ||

| try: | ||

| image = Image.open(image_path) | ||

| return (image, entry) | ||

| except FileNotFoundError: | ||

| raise FileNotFoundError(f"Image file {image_path} not found.") | ||

|

|

||

|

|

||

| class DetectionDataset(Dataset): | ||

| def __init__(self, jsonl_file_path: str, image_directory_path: str): | ||

| self.dataset = JSONLDataset(jsonl_file_path, image_directory_path) | ||

|

|

||

| def __len__(self): | ||

| return len(self.dataset) | ||

|

|

||

| def __getitem__(self, idx): | ||

| image, data = self.dataset[idx] | ||

| prefix = data["prefix"] | ||

| suffix = data["suffix"] | ||

| return prefix, suffix, image | ||
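The dataset classes above read three keys from every JSONL record: `image`, `prefix`, and `suffix`. The sketch below shows what one such record might look like and checks that the keys are present. The file name and the prompt and target strings are made up for illustration, and `parse_annotation_line` is a hypothetical helper that does not appear in the PR.

```python
import json

REQUIRED_KEYS = ("image", "prefix", "suffix")  # keys read by the dataset code above


def parse_annotation_line(line: str) -> dict:
    """Parse one JSONL line and verify it carries the expected keys."""
    entry = json.loads(line)
    missing = [key for key in REQUIRED_KEYS if key not in entry]
    if missing:
        raise KeyError(f"Annotation is missing keys: {missing}")
    return entry


# Illustrative record only: values are invented, not taken from a real dataset.
example_line = json.dumps(
    {
        "image": "0001.jpg",
        "prefix": "<OD>",
        "suffix": "dog<loc_100><loc_200><loc_300><loc_400>",
    }
)
entry = parse_annotation_line(example_line)
print(entry["prefix"], entry["suffix"])
```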

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,31 @@ | ||

| from __future__ import annotations | ||

|

|

||

| import random | ||

| from typing import List | ||

|

|

||

| from torch.utils.data import Dataset | ||

|

|

||

| from maestro.trainer.common.utils.file_system import read_jsonl | ||

|

|

||

|

|

||

| class JSONLDataset(Dataset): | ||

|

I'm pretty sure we do not need

It also feels like

👍 |

||

| # TODO: implementation could be better - avoiding loading | ||

| # whole files to memory | ||

|

|

||

| @classmethod | ||

| def from_jsonl_file(cls, path: str) -> JSONLDataset: | ||

| file_content = read_jsonl(path=path) | ||

| random.shuffle(file_content) | ||

| return cls(jsons=file_content) | ||

|

|

||

| def __init__(self, jsons: List[dict]): | ||

| self.jsons = jsons | ||

|

|

||

| def __getitem__(self, index): | ||

| return self.jsons[index] | ||

|

|

||

| def __len__(self): | ||

| return len(self.jsons) | ||

|

|

||

| def shuffle(self): | ||

| random.shuffle(self.jsons) | ||
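The TODO in this file mentions avoiding loading whole files into memory. One way to approach that, sketched here under assumptions, is to record the byte offset of each line once and parse a record only when it is requested. The class name `LazyJSONLDataset` is hypothetical, and the sketch is a plain Python class rather than a `torch.utils.data.Dataset` subclass so that it stays self-contained.

```python
import json
from typing import List


class LazyJSONLDataset:
    """Sketch: index line offsets once, parse each record on demand."""

    def __init__(self, path: str):
        self._path = path
        self._offsets: List[int] = []
        with open(path, "rb") as f:
            while True:
                offset = f.tell()
                line = f.readline()
                if not line:
                    break  # end of file
                if line.strip():  # skip blank lines
                    self._offsets.append(offset)

    def __len__(self) -> int:
        return len(self._offsets)

    def __getitem__(self, index: int) -> dict:
        # Only the requested line is read and decoded.
        with open(self._path, "rb") as f:
            f.seek(self._offsets[index])
            return json.loads(f.readline().decode("utf-8"))
```

Only the list of integer offsets stays in memory, and shuffling can be done on that list instead of on the parsed records. The trade-off is one file open and seek per item.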

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,40 @@ | ||

| import json | ||

| import os | ||

| from typing import Union, List | ||

|

|

||

|

|

||

| def read_jsonl(path: str) -> List[dict]: | ||

| file_lines = read_file( | ||

| path=path, | ||

| split_lines=True, | ||

| ) | ||

| return [json.loads(line) for line in file_lines if line.strip()] | ||

|

|

||

|

|

||

| def read_file( | ||

| path: str, | ||

| split_lines: bool = False, | ||

| strip_white_spaces: bool = False, | ||

| line_separator: str = "\n", | ||

| ) -> Union[str, List[str]]: | ||

| with open(path, "r") as f: | ||

| file_content = f.read() | ||

| if strip_white_spaces: | ||

| file_content = file_content.strip() | ||

| if not split_lines: | ||

| return file_content | ||

| lines = file_content.split(line_separator) | ||

| if not strip_white_spaces: | ||

| return lines | ||

| return [line.strip() for line in lines] | ||

|

|

||

|

|

||

| def save_json(path: str, content: dict) -> None: | ||

| ensure_parent_dir_exists(path=path) | ||

| with open(path, "w") as f: | ||

| json.dump(content, f, indent=4) | ||

|

|

||

|

|

||

| def ensure_parent_dir_exists(path: str) -> None: | ||

| parent_dir = os.path.dirname(os.path.abspath(path)) | ||

| os.makedirs(parent_dir, exist_ok=True) |
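One detail matters when JSONL content is parsed by splitting on `"\n"`: a file that ends with a newline produces a trailing empty string, which `json.loads` rejects. The short sketch below shows that behaviour and a blank-line filter that avoids the error. The sample content is invented for illustration.

```python
import json

# Typical JSONL content: a newline follows every record, including the last.
content = '{"a": 1}\n{"a": 2}\n'

lines = content.split("\n")
# The final element of `lines` is an empty string.
print(lines)

# Filtering out blank lines before parsing keeps json.loads from raising.
records = [json.loads(line) for line in lines if line.strip()]
print(records)
```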

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,42 @@ | ||

| from typing import Dict, Tuple, Optional | ||

|

|

||

|

|

||

| class CheckpointsLeaderboard: | ||

|

|

||

| def __init__( | ||

| self, | ||

| max_checkpoints: int, | ||

| ): | ||

| self._max_checkpoints = max(max_checkpoints, 1) | ||

| self._leaderboard: Dict[int, Tuple[str, float]] = {} | ||

|

|

||

| def register_checkpoint(self, epoch: int, path: str, loss: float) -> Tuple[bool, Optional[str]]: | ||

| if len(self._leaderboard) < self._max_checkpoints: | ||

| self._leaderboard[epoch] = (path, loss) | ||

| return True, None | ||

| max_loss_key, max_loss_in_leaderboard = None, None | ||

| for key, (_, checkpoint_loss) in self._leaderboard.items(): | ||

| if max_loss_in_leaderboard is None: | ||

| max_loss_key = key | ||

| max_loss_in_leaderboard = checkpoint_loss | ||

| if checkpoint_loss > max_loss_in_leaderboard: # type: ignore | ||

| max_loss_key = key | ||

| max_loss_in_leaderboard = checkpoint_loss | ||

| if loss >= max_loss_in_leaderboard: # type: ignore | ||

| return False, None | ||

| to_be_removed, _ = self._leaderboard.pop(max_loss_key) # type: ignore | ||

| self._leaderboard[epoch] = (path, loss) | ||

| return True, to_be_removed | ||

|

|

||

| def get_best_model(self) -> str: | ||

| min_loss_key, min_loss_in_leaderboard = None, None | ||

| for key, (_, loss) in self._leaderboard.items(): | ||

| if min_loss_in_leaderboard is None: | ||

| min_loss_key = key | ||

| min_loss_in_leaderboard = loss | ||

| if loss < min_loss_in_leaderboard: # type: ignore | ||

| min_loss_key = key | ||

| min_loss_in_leaderboard = loss | ||

| if min_loss_key is None: | ||

| raise RuntimeError("Could not retrieve best model") | ||

| return self._leaderboard[min_loss_key][0] |
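The leaderboard keeps at most `max_checkpoints` entries with the lowest loss, and reports which checkpoint path was evicted so the caller can delete it. A compact sketch of the same idea using `max` and `min` with a key function follows. `LeaderboardSketch` and its method names are hypothetical and serve only to illustrate the eviction rule; this is not the PR's implementation.

```python
from typing import Dict, Optional, Tuple


class LeaderboardSketch:
    """Keeps at most `max_checkpoints` entries with the lowest loss."""

    def __init__(self, max_checkpoints: int):
        self._max_checkpoints = max(max_checkpoints, 1)
        self._entries: Dict[int, Tuple[str, float]] = {}

    def register(self, epoch: int, path: str, loss: float) -> Tuple[bool, Optional[str]]:
        if len(self._entries) < self._max_checkpoints:
            self._entries[epoch] = (path, loss)
            return True, None
        # Epoch whose stored loss is currently the highest (worst).
        worst_epoch = max(self._entries, key=lambda e: self._entries[e][1])
        if loss >= self._entries[worst_epoch][1]:
            return False, None  # no better than the worst kept checkpoint
        evicted_path, _ = self._entries.pop(worst_epoch)
        self._entries[epoch] = (path, loss)
        return True, evicted_path

    def best(self) -> str:
        if not self._entries:
            raise RuntimeError("Could not retrieve best model")
        best_epoch = min(self._entries, key=lambda e: self._entries[e][1])
        return self._entries[best_epoch][0]


board = LeaderboardSketch(max_checkpoints=2)
board.register(epoch=1, path="ckpt_1", loss=0.9)
board.register(epoch=2, path="ckpt_2", loss=0.5)
# A worse loss is rejected; a better one evicts the current worst entry.
rejected = board.register(epoch=3, path="ckpt_3", loss=0.95)
accepted = board.register(epoch=4, path="ckpt_4", loss=0.4)
```

Keeping the incoming `loss` under a different name from the value read out of stored entries makes the final comparison unambiguous.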

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,28 @@ | ||

| from __future__ import annotations | ||

|

|

||

| from typing import Dict, Tuple, List | ||

|

|

||

|

|

||

| class MetricsTracker: | ||

|

|

||

| @classmethod | ||

| def init(cls, metrics: List[str]) -> MetricsTracker: | ||

| return cls(metrics={metric: [] for metric in metrics}) | ||

|

|

||

| def __init__(self, metrics: Dict[str, List[Tuple[int, int, float]]]): | ||

| self._metrics = metrics | ||

|

|

||

| def register(self, metric: str, epoch: int, step: int, value: float) -> None: | ||

| self._metrics[metric].append((epoch, step, value)) | ||

|

|

||

| def describe_metrics(self) -> List[str]: | ||

| return list(self._metrics.keys()) | ||

|

|

||

| def get_metric_values( | ||

| self, | ||

| metric: str, | ||

| with_index: bool = True, | ||

| ) -> list: | ||

| if with_index: | ||

| return self._metrics[metric] | ||

| return [value[2] for value in self._metrics[metric]] |
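With `with_index=True`, `get_metric_values` returns the raw `(epoch, step, value)` tuples. A minimal sketch of how such records could be reduced to one average per epoch is shown below. `average_per_epoch` is a hypothetical helper and the sample values are invented.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def average_per_epoch(values: List[Tuple[int, int, float]]) -> Dict[int, float]:
    """Average (epoch, step, value) records by epoch."""
    grouped: Dict[int, List[float]] = defaultdict(list)
    for epoch, _step, value in values:
        grouped[epoch].append(value)
    return {epoch: sum(vals) / len(vals) for epoch, vals in grouped.items()}


# Invented loss records in the (epoch, step, value) layout used by the tracker.
loss_records = [(0, 0, 1.0), (0, 1, 3.0), (1, 0, 2.0)]
epoch_means = average_per_epoch(loss_records)
```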


I think it is more Florence-2 Dataset than Detection Dataset. I assume we won't change that structure for other tasks?


yeah, let's stick to model-datasets for now