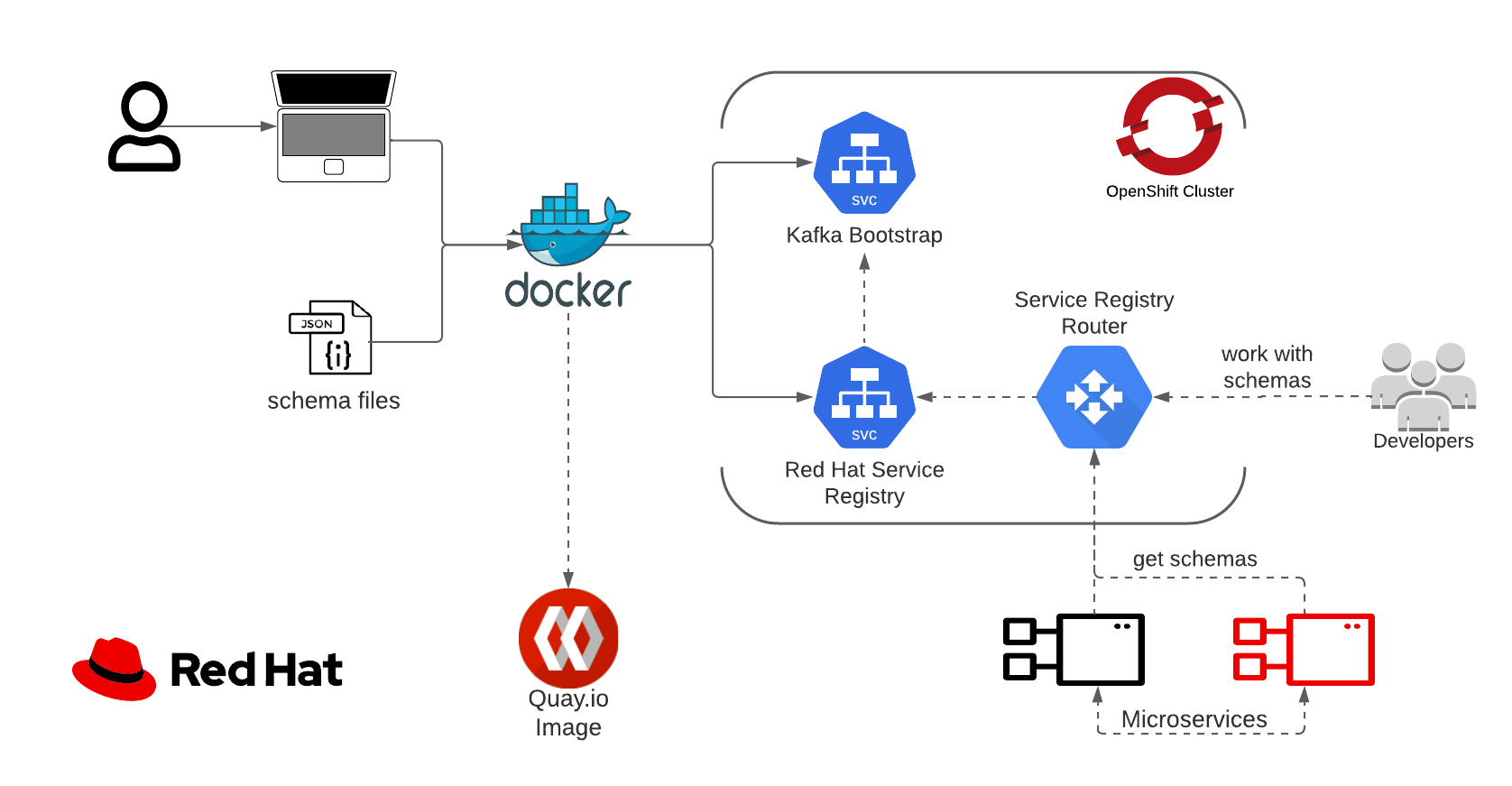

Red Hat's Service Registry, part of Red Hat's Integration services portfolio, is a Kafka-based schema database used for sharing and reusing data structures between developers and services, which makes it an ideal solution for complex microservices-based environments.

And if you're in for the full OpenShift experience, AMQ, which is also part of Red Hat's Integration services portfolio, has your underlying Kafka deployment covered with AMQ Streams.

This containerized application helps you easily migrate your various data structure schemas to Red Hat's Service Registry. Simply invoke it with a list of topics and schemas, and get back to work.

😁

```shell
docker run --network host --rm -it \
  quay.io/ecosystem-appeng/schema-pusher:latest \
  --bootstrap https://<kafka-bootstrap-route-url-goes-here>:443 \
  --registry http://<service-registry-route-url-goes-here> \
  --strategy topic_record \
  --topic sometopic --schema $(base64 -w 0 my_schema.avsc) \
  --topic someothertopic --schema $(base64 -w 0 my_other_schema.avsc) \
  --propkey basic.auth.credentials.source --propvalue USER_INFO \
  --propkey schema.registry.basic.auth.user.info --propvalue registry-user:changeme \
  --truststore $(base64 -w 0 kafka_cluster_ca.p12) \
  --truststorePassword $(cat kafka_cluster_ca.password) \
  --keystore $(base64 -w 0 kafka_user.p12) \
  --keystorePassword $(cat kafka_user.password)
```

You can set custom producer properties using the optional, repeatable `--propkey` and `--propvalue` parameters.

Note that some keys are managed by the application, and any values you pass for them will be overwritten.

If you use a self-signed certificate for your Kafka deployment, you can use the optional `--truststore` and `--truststorePassword` parameters to set the PKCS12 truststore of the Kafka cluster and its password.

If your Kafka deployment requires authentication, you can use the optional `--keystore` and `--keystorePassword` parameters to set the PKCS12 keystore of the Kafka user and its password.
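For readers wiring a producer by hand, the sketch below shows how a PKCS12 truststore and keystore typically map onto the standard Kafka client SSL settings. The property keys are the ones this README lists as application-managed; the class and method names (`SslPropsSketch`, `sslProperties`, `addStore`) are hypothetical, and this is an illustration under those assumptions rather than the application's actual code.

```java
import java.util.Properties;

// Hypothetical sketch, not the application's actual code: maps optional PKCS12
// truststore/keystore settings onto standard Kafka client SSL properties.
public class SslPropsSketch {

    /** Adds one store under the given prefix; the file and its password must be set together. */
    static void addStore(Properties props, String prefix, String path, String password) {
        if (path == null && password == null) {
            return; // this store was not configured
        }
        if (path == null || password == null) {
            throw new IllegalArgumentException(prefix + " file and password must be set together");
        }
        props.setProperty("security.protocol", "SSL");
        props.setProperty(prefix + ".location", path);
        props.setProperty(prefix + ".password", password);
        props.setProperty(prefix + ".type", "PKCS12");
    }

    static Properties sslProperties(String truststorePath, String truststorePassword,
                                    String keystorePath, String keystorePassword) {
        Properties props = new Properties();
        // Truststore: lets the producer trust a self-signed cluster CA.
        addStore(props, "ssl.truststore", truststorePath, truststorePassword);
        // Keystore: lets the producer authenticate as a Kafka user (mutual TLS).
        addStore(props, "ssl.keystore", keystorePath, keystorePassword);
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical local paths and passwords, for illustration only.
        Properties props = sslProperties(
            "/tmp/kafka_cluster_ca.p12", "caPassword",
            "/tmp/kafka_user.p12", "userPassword");
        props.forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

Requiring the file and password together mirrors the "inclusive with" wording in the help output.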

To grab the relevant PKCS12 files and passwords, run the following, replacing `USER_SECRET_NAME` with the name of the user secret and `CLUSTER_CA_SECRET_NAME` with the name of the cluster CA secret (it usually ends with `cluster-ca-cert`):

```shell
oc get secret CLUSTER_CA_SECRET_NAME -o jsonpath='{.data.ca\.p12}' | base64 -d > kafka_cluster_ca.p12
oc get secret CLUSTER_CA_SECRET_NAME -o jsonpath='{.data.ca\.password}' | base64 -d > kafka_cluster_ca.password
oc get secret USER_SECRET_NAME -o jsonpath='{.data.user\.p12}' | base64 -d > kafka_user.p12
oc get secret USER_SECRET_NAME -o jsonpath='{.data.user\.password}' | base64 -d > kafka_user.password
```

For help:

```shell
docker run --rm -it quay.io/ecosystem-appeng/schema-pusher:latest --help
```

prints:

```text
Tool for decoding base64 schema files and producing Kafka messages for the specified topics.
The schema files will be pushed to Red Hat's service registry via the attached Java application.
------------------------------------------------------------------------------------------------
Usage: -h/--help
Usage: [options]

Options:
--bootstrap, (mandatory) kafka bootstrap url.
--registry, (mandatory) service registry url.
--strategy, (optional) subject naming strategy, [topic record topic_record] (default: topic_record).
--topic, (mandatory) topic/s to push the schemas to (repeatable in correlation with schema).
--schema, (mandatory) base64 encoded schema file (repeatable in correlation with topic).
--propkey, (optional) a string key to set for the producer (repeatable in correlation with propvalue).
--propvalue, (optional) a string value to set for the producer (repeatable in correlation with propkey).
--truststore, (optional) base64 encoded pkcs12 truststore for identifying the bootstrap (inclusive with truststorePassword).
--truststorePassword (optional) password for accessing the pkcs12 truststore (inclusive with truststore).
--keystore, (optional) base64 encoded pkcs12 keystore for identifying to the bootstrap (inclusive with keystorePassword).
--keystorePassword (optional) password for accessing the pkcs12 keystore (inclusive with keystore).

Example:
--bootstrap https://kafka-bootstrap-url:443 --registry http://service-registry-url:8080 \
--strategy topic_record \
--topic sometopic --schema $(base64 -w 0 my-schema.avsc) \
--topic someothertopic --schema $(base64 -w 0 my-other-schema.avsc) \
--propkey basic.auth.credentials.source --propvalue USER_INFO \
--propkey schema.registry.basic.auth.user.info --propvalue registry-user:changeme \
--truststore $(base64 -w 0 kafka_cluster_ca.p12) \
--truststorePassword secretTruststorePassword \
--keystore $(base64 -w 0 kafka_user_ca.p12) \
--keystorePassword secretKeystorePassword

This should result in each schema file being produced to its respective topic using the specified naming strategy.
```
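To make the three `--strategy` values more concrete, the sketch below derives a registry subject for a value schema under each one. The formats follow the common Kafka serializer convention (`topic` gives `<topic>-value`, `record` gives the fully-qualified record name, `topic_record` gives `<topic>-<fully-qualified record name>`); that this application produces exactly these subjects is an assumption, and `SubjectNamingSketch` and the record name `com.example.User` are hypothetical.

```java
import java.util.Locale;

// Hypothetical sketch of subject derivation per naming strategy; the formats follow
// the common Kafka serializer convention and are an assumption for this application.
public class SubjectNamingSketch {

    enum Strategy { TOPIC, RECORD, TOPIC_RECORD }

    /** Parses the CLI spelling, e.g. "topic_record". */
    static Strategy parse(String value) {
        return Strategy.valueOf(value.toUpperCase(Locale.ROOT));
    }

    /** recordFullName is the Avro namespace plus record name, e.g. "com.example.User". */
    static String subject(Strategy strategy, String topic, String recordFullName) {
        switch (strategy) {
            case TOPIC:
                return topic + "-value";             // one subject per topic
            case RECORD:
                return recordFullName;               // one subject per record type, shared across topics
            case TOPIC_RECORD:
                return topic + "-" + recordFullName; // one subject per topic and record type pair
            default:
                throw new IllegalArgumentException("Unknown strategy: " + strategy);
        }
    }

    public static void main(String[] args) {
        String record = "com.example.User"; // hypothetical record from an .avsc file
        for (Strategy s : Strategy.values()) {
            System.out.println(s + " -> " + subject(s, "sometopic", record));
        }
    }
}
```

Under this convention, `topic_record` is the only strategy that lets one topic carry several record types without their subjects colliding across topics.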

The following producer property keys cannot be overwritten using the `--propkey` and `--propvalue` parameters:

- `bootstrap.servers`
- `schema.registry.url`
- `key.serializer`
- `value.serializer`
- `value.subject.name.strategy`
- `security.protocol`
- `ssl.truststore.location`
- `ssl.truststore.password`
- `ssl.truststore.type`
- `ssl.keystore.location`
- `ssl.keystore.password`
- `ssl.keystore.type`
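One way to enforce the protected keys listed above is to drop them from the user-supplied pairs and then write the application-managed values last. The sketch below illustrates that precedence; `ProducerPropsSketch` and `merge` are hypothetical names, and whether the application filters, overwrites, or both is an assumption.

```java
import java.util.Map;
import java.util.Properties;
import java.util.Set;

// Hypothetical sketch of property precedence: custom --propkey/--propvalue pairs
// cannot override the application-managed keys.
public class ProducerPropsSketch {

    // The keys this README lists as not overridable.
    static final Set<String> PROTECTED_KEYS = Set.of(
        "bootstrap.servers", "schema.registry.url", "key.serializer", "value.serializer",
        "value.subject.name.strategy", "security.protocol",
        "ssl.truststore.location", "ssl.truststore.password", "ssl.truststore.type",
        "ssl.keystore.location", "ssl.keystore.password", "ssl.keystore.type");

    static Properties merge(Map<String, String> custom, Map<String, String> managed) {
        Properties props = new Properties();
        // Custom pairs are applied first, skipping any protected key.
        custom.forEach((key, value) -> {
            if (!PROTECTED_KEYS.contains(key)) {
                props.setProperty(key, value);
            }
        });
        // Application-managed values are written last, so they always win.
        managed.forEach(props::setProperty);
        return props;
    }

    public static void main(String[] args) {
        Map<String, String> custom = Map.of(
            "basic.auth.credentials.source", "USER_INFO",
            "bootstrap.servers", "ignored:9092"); // dropped: protected key
        Map<String, String> managed = Map.of("bootstrap.servers", "kafka-bootstrap-url:443");
        System.out.println(merge(custom, managed));
    }
}
```

Filtering, rather than only overwriting, also covers the case where a protected key such as `ssl.truststore.location` is not set by the application at all.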

Currently, we only support Avro schemas.

This application is made up of three layers:

- A Java application: a CLI application in charge of consuming schema files and producing Kafka messages and service registry requests for each schema file.
  Code pointers:
  - `com/redhat/schema/pusher/PushCli.java` is the CLI abstraction, and `com/redhat/schema/pusher/avro/AvroPushCli.java` is the implementation in charge of picking up the arguments and preparing them for the producer invocations.
  - `com/redhat/schema/pusher/SchemaPusher.java` is the contract, and `com/redhat/schema/pusher/avro/AvroSchemaPusher.java` is the implementation in charge of pushing schema messages via the producer.
  - `com/redhat/schema/pusher/MainApp.java` is the main application entry point, instantiating the DI (dependency injection) context and loading the command line.
- A shell script in charge of decoding, extracting, and invoking the Java application with the extracted content.
- A Dockerfile instruction set in charge of containerizing the shell script and the Java application.
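The decoding step that sits between the container arguments and the Java application can be pictured with the sketch below: a base64 `--schema` value is decoded back into a file whose path can then be passed to the Java app. It is a Java illustration of that idea, not the project's shell script, and `DecodeSketch`, `decodeToTempFile`, and `encodeFile` are hypothetical names. `encodeFile` also shows why `base64 -w 0` is used in the examples: an unwrapped, single-line value survives as one shell argument.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;

// Hypothetical Java counterpart of the shell script's decoding step.
public class DecodeSketch {

    /** Decodes a base64 value (as passed to --schema) into a new temporary file. */
    static Path decodeToTempFile(String base64Value, String suffix) {
        try {
            byte[] content = Base64.getDecoder().decode(base64Value.trim());
            Path file = Files.createTempFile("schema-", suffix);
            Files.write(file, content);
            return file;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Single-line encoding with no line wrapping, like `base64 -w 0 file`. */
    static String encodeFile(Path file) {
        try {
            return Base64.getEncoder().encodeToString(Files.readAllBytes(file));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        String schema = "{\"type\":\"record\",\"name\":\"User\",\"fields\":[]}";
        String encoded = Base64.getEncoder().encodeToString(schema.getBytes(StandardCharsets.UTF_8));
        Path path = decodeToTempFile(encoded, ".avsc");
        System.out.println(path + " -> " + new String(Files.readAllBytes(path), StandardCharsets.UTF_8));
    }
}
```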

You can also invoke the Java application directly. Keep in mind that the app takes paths to schema files rather than base64-encoded values, as well as the actual paths of the PKCS12 files and literal passwords.

The shell script is the component in charge of decoding and preparing those arguments for invoking the Java app.

Note that this form of execution requires building the application first (for example, with `mvn package`).

```shell
java -jar target/schema-pusher-jar-with-dependencies.jar \
  -b=https://<kafka-bootstrap-route-url-goes-here>:443 \
  -r=http://<service-registry-route-url-goes-here> \
  -t=sometopic \
  -s=src/test/resources/com/redhat/schema/pusher/avro/schemas/test_schema1.avsc \
  -t=someothertopic \
  -s=src/test/resources/com/redhat/schema/pusher/avro/schemas/test_schema2.avsc \
  --pk=custom-property-key \
  --pv=custom-property-value \
  --tf=certs/ca.p12 \
  --tp=secretClusterCaPKCS12password \
  --kf=certs/user.p12 \
  --kp=secretUserPKCS12password
```

Note that `topic_record` is the default strategy used if none is specified with `-n`.
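The repeated `-t`/`-s` options above are correlated by position: the first topic goes with the first schema path, and so on. The sketch below shows that pairing with a count check. `TopicSchemaPairsSketch` and `pair` are hypothetical names, and the application may rely on its CLI library's argument groups instead. It returns a list rather than a map so the same topic can appear more than once.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: pair repeated --topic and --schema-path values by position.
public class TopicSchemaPairsSketch {

    static List<Map.Entry<String, String>> pair(List<String> topics, List<String> schemaPaths) {
        if (topics.size() != schemaPaths.size()) {
            throw new IllegalArgumentException("Every topic needs a matching schema path: got "
                + topics.size() + " topics and " + schemaPaths.size() + " schema paths");
        }
        // A list, not a map, so one topic may be paired with several schemas.
        List<Map.Entry<String, String>> pairs = new ArrayList<>();
        for (int i = 0; i < topics.size(); i++) {
            pairs.add(Map.entry(topics.get(i), schemaPaths.get(i)));
        }
        return pairs;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, String>> pairs = pair(
            List.of("sometopic", "someothertopic"),
            List.of("test_schema1.avsc", "test_schema2.avsc"));
        pairs.forEach(p -> System.out.println(p.getKey() + " <- " + p.getValue()));
    }
}
```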

For help:

```shell
java -jar target/schema-pusher-jar-with-dependencies.jar --help
```

prints:

```text
Usage: <main class> [-hV] -b=<kafkaBootstrap> [-n=<namingStrategy>]
                    -r=<serviceRegistry> (-t=<topic> -s=<schemaPath>)...
                    [--pk=<propertyKey> --pv=<propertyValue>]
                    [--tf=<truststoreFile> --tp=<truststorePassword>]
                    [--kf=<keystoreFile> --kp=<keystorePassword>]
Push schemas to Red Hat's Service Registry
  -b, --bootstrap-url=<kafkaBootstrap>
                  The url for Kafka's bootstrap server.
  -h, --help      Show this help message and exit.
      --kf, --keystore-file=<keystoreFile>
                  The path for the keystore pkcs12 file for use with the
                    Kafka producer
      --kp, --keystore-password=<keystorePassword>
                  The password for the keystore pkcs12 file for use with
                    the Kafka producer
  -n, --naming-strategy=<namingStrategy>
                  The subject naming strategy.
      --pk, --property-key=<propertyKey>
                  Producer property key
      --pv, --property-value=<propertyValue>
                  Producer property value
  -r, --registry-url=<serviceRegistry>
                  The url for Red Hat's service registry.
  -s, --schema-path=<schemaPath>
                  The schema path for the topic, correlated with a topic.
  -t, --topic=<topic> The desired topic for the schema, correlated with a
                    schema path.
      --tf, --truststore-file=<truststoreFile>
                  The path for the truststore pkcs12 file for use with
                    the Kafka producer
      --tp, --truststore-password=<truststorePassword>
                  The password for the truststore pkcs12 file for use
                    with the Kafka producer
  -V, --version   Print version information and exit.
```

| Command | Description |
|---|---|
| `mvn package` | tests and builds the Java application. |
| `mvn verify` | tests, builds, and verifies code formatting. |
| `mvn k8s:build` | builds the Docker image. |
| `mvn k8s:push` | pushes the Docker image to Quay.io. |

| Workflow | Trigger | Description |
|---|---|---|
| Pull Request | pull requests | builds and verifies the project |
| Release | push semver tags to the main branch | creates a GitHub release for the triggering tag |
| Stage | manually, or push to the main branch | builds the project, including the Docker image |

- Decide the desired version (e.g. `1.2.3`) and version title (e.g. `new version title`).

  Tip: the following command will predict the next semver based on git tags and conventional commits:

  ```shell
  docker run --rm -it -v $PWD:/usr/share/repo tomerfi/version-bumper:latest | cut -d ' ' -f 1 | xargs
  ```

- Set the desired release version, then add, commit, and tag it (do not use `[skip ci]` for this commit):

  ```shell
  mvn versions:set -DnewVersion=1.2.3
  git add pom.xml
  git commit -m "build: set release version to 1.2.3"
  git tag -m "new version title" 1.2.3
  ```

- Build the project and push it to the image registry:

  ```shell
  mvn package k8s:build k8s:push
  ```

- Set the next development version iteration, then add, commit, and push:

  ```shell
  mvn versions:set -DnextSnapshot
  git add pom.xml
  git commit -m "build: set a new snapshot version [skip ci]"
  git push --follow-tags
  ```

The pushed tag will trigger the `release.yml` workflow, which will automatically create a GitHub release for the `1.2.3` tag titled `new version title`.
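The version prediction in the first release step relies on conventional commits. The sketch below shows the general convention: a `!` after the commit type means a major bump, `feat` means a minor bump, and anything else means a patch bump. This is a simplified illustration of the convention only. The `tomerfi/version-bumper` image's exact rules may differ (for example, it may also read `BREAKING CHANGE` footers, which this sketch ignores). `NextVersionSketch` is a hypothetical name.

```java
import java.util.List;

// Hypothetical, simplified sketch of conventional-commit version bumping.
// It only inspects commit subjects and expects current as MAJOR.MINOR.PATCH.
public class NextVersionSketch {

    static String next(String current, List<String> commitSubjects) {
        String[] parts = current.split("\\.");
        int major = Integer.parseInt(parts[0]);
        int minor = Integer.parseInt(parts[1]);
        int patch = Integer.parseInt(parts[2]);
        boolean breaking = false;
        boolean feature = false;
        for (String subject : commitSubjects) {
            int colon = subject.indexOf(':');
            String type = colon >= 0 ? subject.substring(0, colon) : "";
            if (type.endsWith("!")) {
                breaking = true;          // e.g. "feat!: ..." or "fix(cli)!: ..."
            } else if (type.startsWith("feat")) {
                feature = true;           // e.g. "feat: ..." or "feat(cli): ..."
            }
        }
        if (breaking) {
            return (major + 1) + ".0.0";
        }
        if (feature) {
            return major + "." + (minor + 1) + ".0";
        }
        return major + "." + minor + "." + (patch + 1); // fixes and other changes
    }

    public static void main(String[] args) {
        System.out.println(next("1.2.3", List.of("fix: typo", "feat(cli): add flag")));
    }
}
```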