[Question] Is it possible to run on the internal IMU only? #1

Comments

|

I have a similar query. |

|

Thank you for your interest in the work and all the enquiries. Here's the answer from our side:

I hope this can help you. Don't hesitate if you have any further questions in this regard. |

|

Thank you for your answer. However, I have a different opinion about the built-in IMU accelerometer being normalized to 1. What do you think about this? |

|

Hi, thanks for posting the graph. |

|

Thank you. I understand the problems with the internal IMU and the reasons for using an external IMU. I am going to try to work on this problem for a while. |

|

I noticed that kamibukuro5656 has closed this thread, but I would like to reopen it to further discuss the suitability of 6-axis IMU output for this repo. As we understand it, the Livox internal IMU is 6-axis: its output contains accelerometer and gyroscope values but no direct orientation. The external Xsens IMU is 9-axis and outputs an orientation value. Encouraged by kamibukuro5656's report that he could run the sample FR-IOSB-Long dataset with LiLi-OM by adding an IMU filter to create an orientation estimate, I ran a similar test with the KA_Urban_East dataset, and here is the result I got,

So my question is: can 6-axis IMU output satisfy the requirements for running this repo? If yes, how exactly? |

|

In principle, there is no need to run an additional filter at all. The 9-axis IMU information is read by the system as a standard ROS message, but the orientation is never used anywhere except for orientation initialization at the first frame. The system uses the orientation reading at the very first frame only to transform the frame to ENU coordinates. Other than that, it never uses any orientation readings (neither raw measurements nor the output of any filter). To achieve the same thing, you can also average the accelerometer readings of the first several frames to initialize the orientation. The assumption here is that the sensor starts from a static state (e.g., you put it on the ground or hold it still), so that the averaged acceleration can be regarded as gravity. Such an orientation initialization is also applicable to our recorded data sets. In summary, the system only needs a proper orientation initialization (either of the two ways mentioned above) and 6-axis IMU readings. |
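The accelerometer-averaging initialization described above can be sketched as follows. This is a minimal illustration, not the code used in LiLi-OM: the function names `initial_orientation_from_accel` and `rotate` are hypothetical, the quaternion order is assumed to be [w, x, y, z], and the sensor is assumed to be static so that the averaged reading points along world "up". Gravity alone does not determine heading, so yaw is simply left at zero.

```python
import math


def initial_orientation_from_accel(samples):
    """Return a unit quaternion [w, x, y, z] that rotates the averaged
    accelerometer vector onto the world +Z (up) axis.

    Assumption: the sensor is static, so the averaged reading is the
    reaction to gravity and points 'up' in the sensor frame.
    """
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one accelerometer sample")
    ax = sum(s[0] for s in samples) / n
    ay = sum(s[1] for s in samples) / n
    az = sum(s[2] for s in samples) / n
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm < 1e-9:
        raise ValueError("averaged acceleration is (almost) zero")
    ux, uy, uz = ax / norm, ay / norm, az / norm

    # Shortest-arc rotation from u to z = (0, 0, 1):
    # vector part = u x z = (uy, -ux, 0), scalar part = 1 + u . z
    dot = uz
    if dot < -1.0 + 1e-9:
        # Sensor is upside down: rotate 180 degrees about the x-axis.
        return [0.0, 1.0, 0.0, 0.0]
    w, x, y, z = 1.0 + dot, uy, -ux, 0.0
    qn = math.sqrt(w * w + x * x + y * y + z * z)
    return [w / qn, x / qn, y / qn, z / qn]


def rotate(q, v):
    """Rotate vector v by unit quaternion q = [w, x, y, z]."""
    w, qx, qy, qz = q
    vx, vy, vz = v
    # t = 2 * (q_vec x v)
    tx = 2.0 * (qy * vz - qz * vy)
    ty = 2.0 * (qz * vx - qx * vz)
    tz = 2.0 * (qx * vy - qy * vx)
    # v' = v + w * t + q_vec x t
    return (
        vx + w * tx + (qy * tz - qz * ty),
        vy + w * ty + (qz * tx - qx * tz),
        vz + w * tz + (qx * ty - qy * tx),
    )


if __name__ == "__main__":
    # Made-up static readings from a slightly tilted sensor (m/s^2).
    readings = [(0.5, -0.3, 9.78), (0.52, -0.31, 9.79), (0.49, -0.29, 9.77)]
    q0 = initial_orientation_from_accel(readings)
    print(q0)
```

A level sensor yields the identity quaternion [1, 0, 0, 0], and rotating the normalized averaged reading by the result should give approximately (0, 0, 1).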

|

Hi kailaili, I am a bit curious about the second orientation initialization approach in your post: with it, the z-axis is aligned to world U, while the x- and y-axes are not aligned to world E and N. Does this mean that z alignment is enough for the initial orientation? Correct me if I am wrong. [I was trying to read through the backendFusion source code and the paper, but I do not fully understand it yet.] |

|

Hi radeonwu, there are a couple of things I can answer regarding your question. If there is any mistake in my understanding, please correct me. |

|

I also got the following answer from Livox about the unit of built-in IMU. The unit is G. |
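Since the built-in IMU reports acceleration in G while the ROS `sensor_msgs/Imu` convention is m/s^2, a conversion step along the following lines may be needed before the data is used. This is only a sketch: the function name `accel_g_to_mps2` is hypothetical, and the scale factor used here is the conventional standard gravity value, which may differ slightly from local gravity.

```python
# Conventional standard gravity, in m/s^2 per g.
STANDARD_GRAVITY = 9.80665


def accel_g_to_mps2(accel_g):
    """Convert an (x, y, z) acceleration given in g to m/s^2."""
    return tuple(a * STANDARD_GRAVITY for a in accel_g)


if __name__ == "__main__":
    # A static, level sensor should read about 1 g on the z-axis.
    print(accel_g_to_mps2((0.0, 0.0, 1.0)))
```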

|

Thanks kamibukuro5656 for the prompt reply. |

This is a good update, and thanks for sharing. |

You can find it at line 624 of BackendFusion.cpp, in the function imuHandler. |

As mentioned before, we also tried the internal IMU at the beginning, but we noticed that the internal one is not as good as the external Xsens MTi-670. Therefore, we provided the external one. You can certainly continue trying the internal one now that the unit issue is clarified. |

Thank you for your update. The second initialization method that I mentioned above is basically what the Madgwick filter does. A side note: orientations in LiLi-OM are always represented by quaternions. So, if you want to have a "zero" orientation, it should be [1,0,0,0]^T. |

|

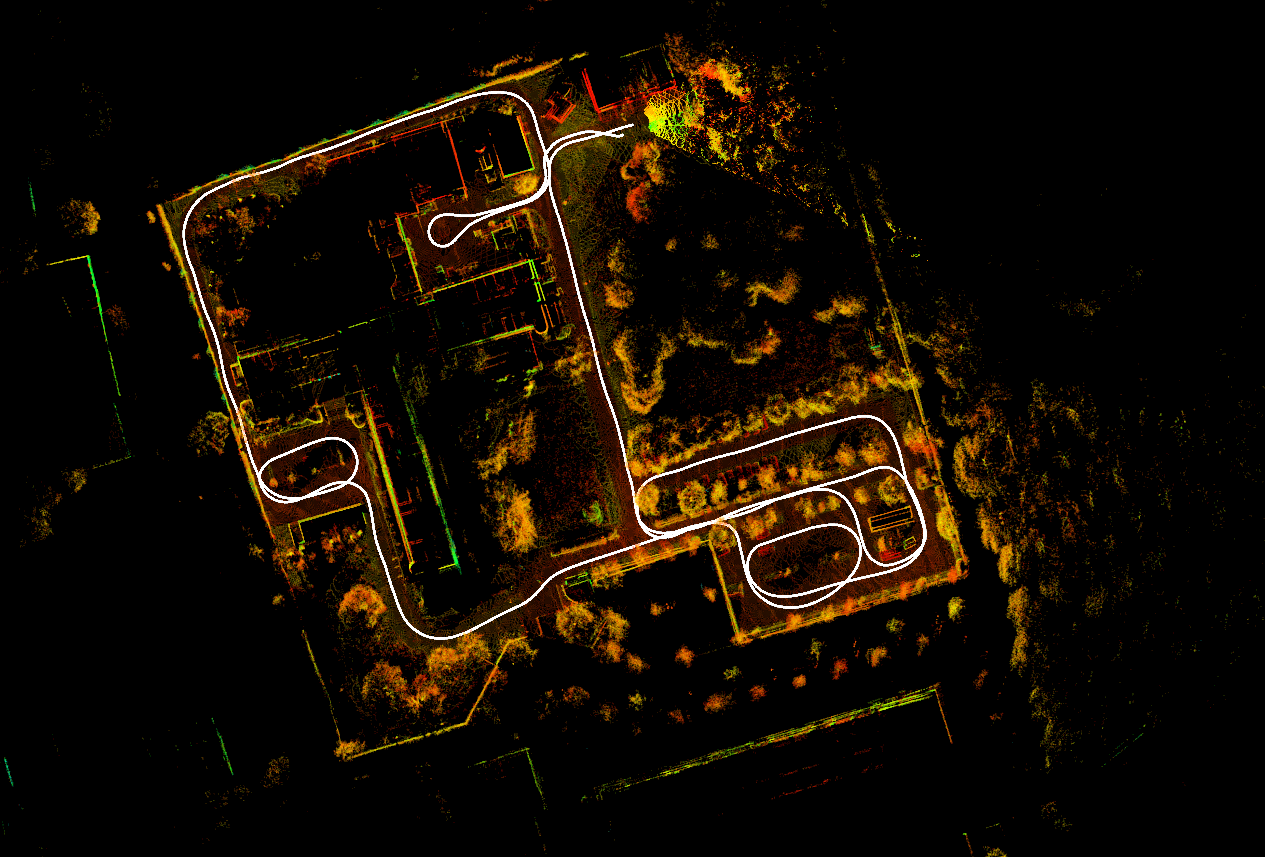

Hello. I was finally able to get good results with only the built-in IMU of the Horizon. To wrap up, I'll summarize what I did to get these results.

Once again, thanks for publishing the great results. |

|

Hi, kamibukuro5656,

|

Happy to hear that! |

|

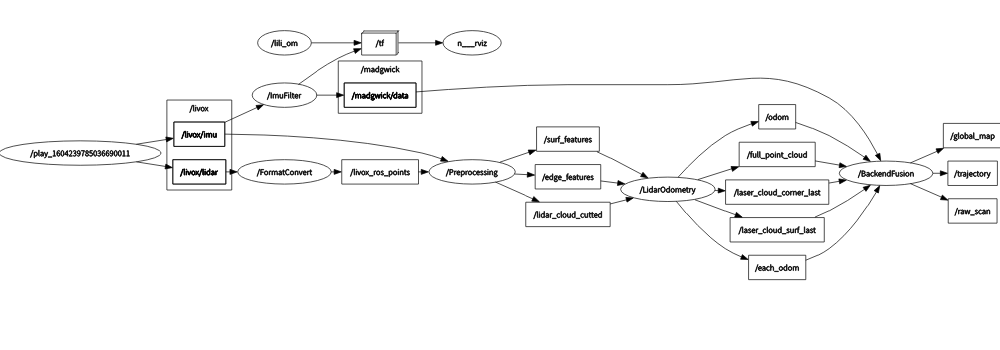

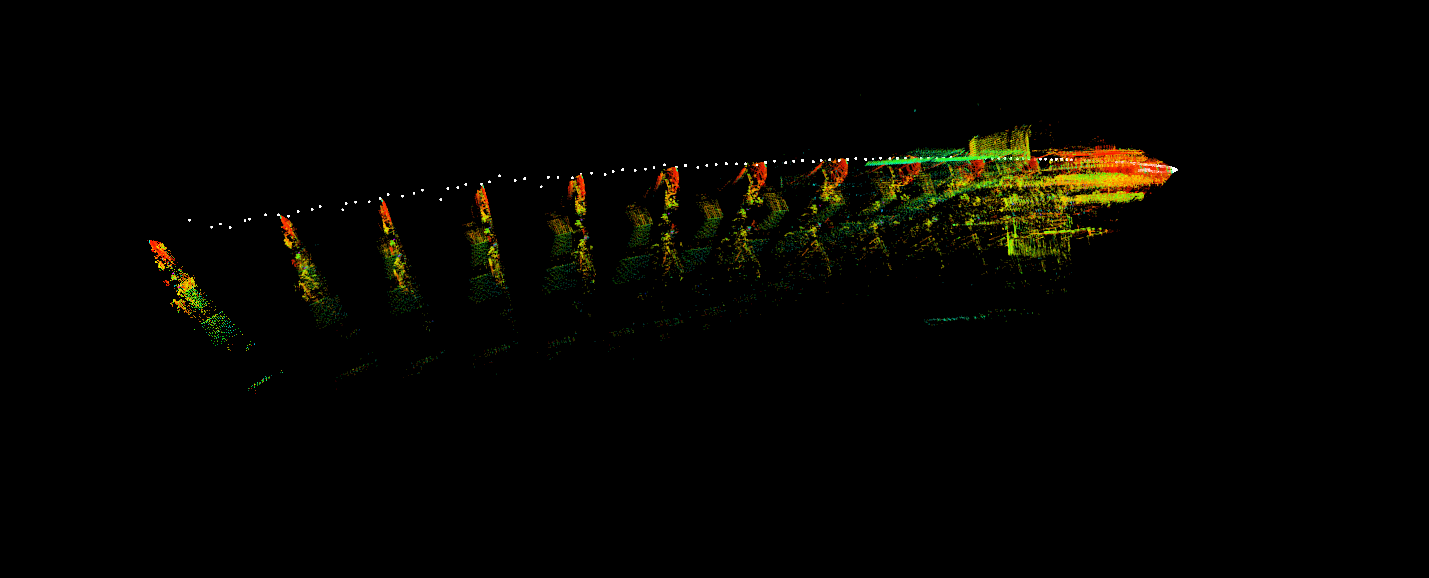

I am back again for help ^_^. I have kept trying the internal IMU data with the Madgwick filter, but have not yet succeeded on my own dataset. I wonder what I might have done wrong, or whether certain parameters should be tuned further. Your suggestions are greatly appreciated. The most common symptom is that after the LiLi-OM program has run for some time, the trajectory starts to drift. A screenshot is attached here for illustration. The rqt_graph screenshot is also included here. I recorded a small dataset using the Livox, with the link attached here for reference, |

|

I would like to give an update on the issue I raised last time.

|

Thanks for your work. How do you project the LiDAR map results onto Google Maps: by directly transforming the coordinates into BLH, or by some other method? I have only seen this done with picture-editing programs such as PS. |

|

@kamibukuro5656 I am keen to try the Horizon using the internal IMU. Have you posted your changes/code so that I could give it a try? Many thanks, Simon |

|

I have submitted the script needed to run LiLi-OM on the internal IMU. |

|

Many thanks for submitting this, I will give it a go! |

|

I am also seeing a time shift between the internal IMU and the external IMU (clearly visible at angular_velocity/y in the plot below), which could be catastrophic for the filter. Is this compensated for anywhere? However, I am not sure whether rqt_plot uses the message header timestamps or system time for the x-axis, so this could also just be due to my setup. |
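One way to check such an offset independently of rqt_plot is to export both angular_velocity/y series, resample them onto a common time grid built from the message header stamps, and cross-correlate them. The sketch below only covers the cross-correlation step. It assumes both inputs are already equally sampled lists of floats, and `estimate_lag` is a hypothetical helper, not part of LiLi-OM or any ROS package.

```python
import math


def estimate_lag(ref, other, max_lag):
    """Return the integer shift k in [-max_lag, max_lag] (in samples) that
    maximizes the mean of ref[i] * other[i + k] after mean removal.

    A positive k means that events appear later in `other` than in `ref`.
    """
    n = min(len(ref), len(other))
    if n == 0:
        raise ValueError("both signals must be non-empty")
    ref_mean = sum(ref[:n]) / n
    other_mean = sum(other[:n]) / n
    r = [v - ref_mean for v in ref[:n]]
    o = [v - other_mean for v in other[:n]]

    best_k, best_score = 0, float("-inf")
    for k in range(-max_lag, max_lag + 1):
        total, count = 0.0, 0
        for i in range(n):
            j = i + k
            if 0 <= j < n:
                total += r[i] * o[j]
                count += 1
        if count == 0:
            continue
        score = total / count  # normalize by the overlap length
        if score > best_score:
            best_k, best_score = k, score
    return best_k


if __name__ == "__main__":
    # Synthetic example: the same bump, delayed by 3 samples in `delayed`.
    ref = [math.exp(-((i - 50) / 5.0) ** 2) for i in range(200)]
    delayed = [math.exp(-((i - 53) / 5.0) ** 2) for i in range(200)]
    print(estimate_lag(ref, delayed, max_lag=10))
```

Multiplying the returned lag by the sampling period gives an estimate of the time offset. A result near zero would suggest that the shift seen in the plot comes from the plotting setup rather than from the data.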

|

This question helped me a lot in deploying this project with the internal IMU. If anyone wants to use the internal IMU, remember to negate the x and y components of the acceleration and the angular velocity. |
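The sign change mentioned in the comment above could look roughly like this. It is a sketch of one reading of that comment (negate x and y of both the acceleration and the angular velocity, and keep z). The helper names `flip_xy` and `remap_internal_imu` are hypothetical, and whether the flip is needed at all may depend on your sensor mounting and driver.

```python
def flip_xy(vec):
    """Negate the x and y components of an (x, y, z) tuple and keep z."""
    x, y, z = vec
    return (-x, -y, z)


def remap_internal_imu(accel, gyro):
    """Apply the x/y sign flip to both accelerometer and gyroscope readings."""
    return flip_xy(accel), flip_xy(gyro)


if __name__ == "__main__":
    # Made-up readings for illustration only.
    accel, gyro = remap_internal_imu((0.1, -0.2, 9.8), (0.01, 0.02, -0.03))
    print(accel, gyro)
```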

Hello.

Thank you for publishing your excellent work.

Now, I have a question about IMU.

I'm trying to see if it is possible to run LiLi-OM using only the internal IMU of Horizon.

Have you ever tried to run LiLi-OM with only the internal IMU?

I have calculated the Orientation by using the Madgwick Filter and inputting it, as shown below.

This attempt worked well in the case of the "FR-IOSB-Long.bag".

However, it did not work with "2020_open_road.bag", which is available in the official Livox Loam repository.

(https://terra-1-g.djicdn.com/65c028cd298f4669a7f0e40e50ba1131/demo/2020_open_road.bag)

Can these problems be solved by parameter tuning?

Or is the built-in IMU and Madgwick Filter not accurate enough?

I would appreciate any advice you can give me.
