diff --git a/.bumpversion.cfg b/.bumpversion.cfg

new file mode 100644

index 00000000..9ed827f8

--- /dev/null

+++ b/.bumpversion.cfg

@@ -0,0 +1,5 @@

+[bumpversion]

+current_version = 0.3.1

+files = SpiderKeeper/__init__.py

+commit = True

+
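`.bumpversion.cfg` tells bumpversion to rewrite the version string in `SpiderKeeper/__init__.py`, so `current_version` must stay in step with `__version__` in that file (both are `0.3.1` in this change). Below is a minimal, hypothetical consistency check that uses only the standard library. `version_matches` is an illustrative name, and the regex assumes the single-quoted `__version__ = '...'` form used in this project.

```python
import configparser
import re


def version_matches(cfg_text, init_text):
    """Return True if __version__ in init_text equals current_version in cfg_text."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    current = parser.get('bumpversion', 'current_version')
    # Assumes the single-quoted form used in SpiderKeeper/__init__.py.
    match = re.search(r"__version__\s*=\s*'([^']+)'", init_text)
    return match is not None and match.group(1) == current
```

A check like this could run in CI before tagging a release. It is not a bumpversion feature.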

diff --git a/CHANGELOG.md b/CHANGELOG.md

index c88d5327..583a1c69 100644

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -1,34 +1,16 @@

-# SpiderKeeper Changelog

-## 1.2.0 (2017-07-24)

-- support chose server manually

-- support set cron exp manually

-- fix log Chinese decode problem

-- fix scheduler trigger not fire problem

-- fix not delete project on scrapyd problem

+# SpiderKeeper-2 Changelog

-## 1.1.0 (2017-04-25)

-- support basic auth

-- show spider crawl time info (last_runtime,avg_runtime)

-- optimized for mobile

+## 0.3.0 (2018-09-14)

+- SpiderKeeper was integrated into the scrapyd service

-## 1.0.3 (2017-04-17)

-- support view log

+## 0.2.5 (2018-09-14)

+- Refactor the Flask application

-## 1.0.0 (2017-03-30)

-- refactor

-- support py3

-- optimized api

-- optimized scheduler

-- more scalable (can support access multiply spider service)

-- show running stats

-

-## 0.2.0 (2016-04-13)

-- support view job of multi daemons.

-- support run on multi daemons.

-- support choice running daemon automaticaly.

-

-## 0.1.1 (2016-02-16)

-- add status monitor(https://github.com/afaqurk/linux-dash)

-

-## 0.1.0 (2016-01-18)

-- initial.

\ No newline at end of file

+## 0.2.0 (2017-10-26)

+- SpiderKeeper was forked as SpiderKeeper-2.

+- Add a button for removing all periodic jobs.

+- All tasks now show stats.

+- When SpiderKeeper runs under WSGI, the background scheduler should not be used ([see issue](https://github.com/agronholm/apscheduler/issues/160)); the scheduler should run in a separate process instead, so it was moved into its own module.

+- Add foreign key constraints to models.

+- Projects no longer need to be created manually; all projects are synchronized automatically with scrapyd.

+- Fix bugs.

\ No newline at end of file
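The changelog notes that foreign key constraints were added to the models. For example, `SpiderInstance.project_id` now references `sk_project.id` with `ondelete='CASCADE'`. If the metadata database is SQLite (the default in the original SpiderKeeper options), SQLite enforces foreign keys, including `ON DELETE CASCADE`, only on connections where `PRAGMA foreign_keys = ON` has been issued. The stdlib sketch below shows the difference. It uses a simplified two-table schema, not the real models, and `orphaned_spiders_after_delete` is a hypothetical helper.

```python
import sqlite3


def orphaned_spiders_after_delete(enforce_foreign_keys):
    """Delete a project and return how many spider rows still reference it.

    Simplified stand-in for the sk_project / sk_spider tables.
    """
    conn = sqlite3.connect(':memory:')
    try:
        if enforce_foreign_keys:
            # SQLite ignores FOREIGN KEY clauses unless enabled per connection.
            conn.execute('PRAGMA foreign_keys = ON')
        conn.execute('CREATE TABLE sk_project (id INTEGER PRIMARY KEY, project_name TEXT)')
        conn.execute(
            'CREATE TABLE sk_spider ('
            ' id INTEGER PRIMARY KEY,'
            ' spider_name TEXT,'
            ' project_id INTEGER NOT NULL'
            ' REFERENCES sk_project(id) ON DELETE CASCADE)'
        )
        conn.execute("INSERT INTO sk_project (id, project_name) VALUES (1, 'demo')")
        conn.executemany(
            'INSERT INTO sk_spider (spider_name, project_id) VALUES (?, 1)',
            [('spider_a',), ('spider_b',)],
        )
        conn.execute('DELETE FROM sk_project WHERE id = 1')
        (count,) = conn.execute(
            'SELECT COUNT(*) FROM sk_spider WHERE project_id = 1'
        ).fetchone()
        return count
    finally:
        conn.close()
```

Without the pragma, both spider rows survive as orphans. With it, the cascade removes them. For SQLAlchemy, the SQLite dialect documentation recommends issuing the pragma from an engine `connect` event listener.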

diff --git a/README.md b/README.md

index 52c81f11..adc6549b 100644

--- a/README.md

+++ b/README.md

@@ -1,8 +1,9 @@

-# SpiderKeeper

+# SpiderKeeper-2

+#### This is a fork of [SpiderKeeper](https://github.com/DormyMo/SpiderKeeper). See the [changelog](https://github.com/kalombos/SpiderKeeper/blob/master/CHANGELOG.md) for new features.

-[](https://pypi.python.org/pypi/SpiderKeeper)

-[](https://pypi.python.org/pypi/SpiderKeeper)

-[](https://github.com/DormyMo/SpiderKeeper/blob/master/LICENSE)

+[](https://pypi.python.org/pypi/SpiderKeeper-2)

+[](https://pypi.python.org/pypi/SpiderKeeper-2)

+

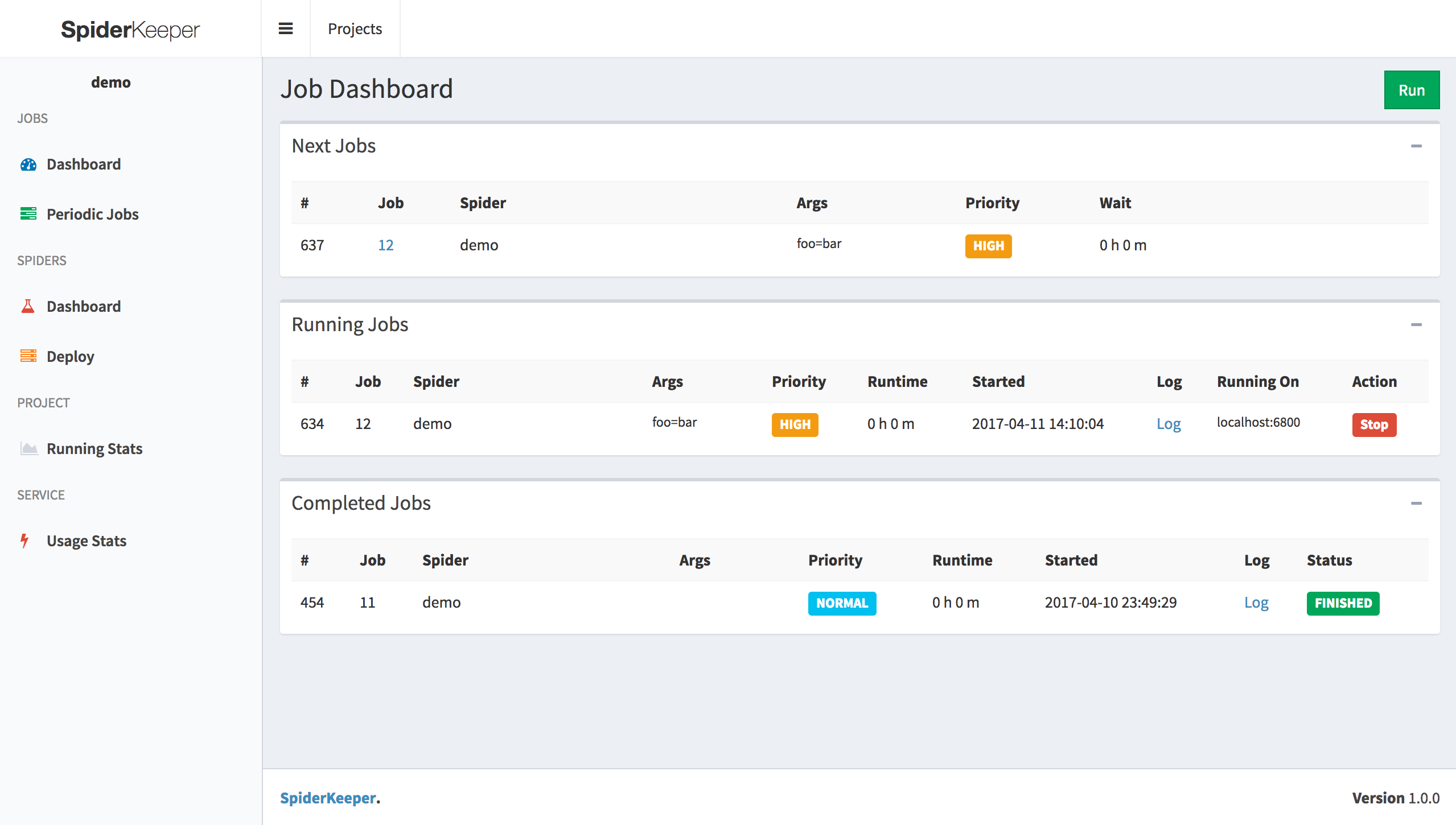

A scalable admin ui for spider service

@@ -12,10 +13,10 @@ A scalable admin ui for spider service

- With a single click deploy the scrapy project

- Show spider running stats

- Provide api

+- Integrated into scrapyd

+

-Current Support spider service

-- [Scrapy](https://github.com/scrapy/scrapy) ( with [scrapyd](https://github.com/scrapy/scrapyd))

## Screenshot

@@ -29,83 +30,38 @@ Current Support spider service

```

-pip install spiderkeeper

+pip install spiderkeeper-2

```

-### Deployment

-

-```

-

-spiderkeeper [options]

-

-Options:

-

- -h, --help show this help message and exit

- --host=HOST host, default:0.0.0.0

- --port=PORT port, default:5000

- --username=USERNAME basic auth username ,default: admin

- --password=PASSWORD basic auth password ,default: admin

- --type=SERVER_TYPE access spider server type, default: scrapyd

- --server=SERVERS servers, default: ['http://localhost:6800']

- --database-url=DATABASE_URL

- SpiderKeeper metadata database default: sqlite:////home/souche/SpiderKeeper.db

- --no-auth disable basic auth

- -v, --verbose log level

-

-

-example:

-

-spiderkeeper --server=http://localhost:6800

-

-```

## Usage

-```

-Visit:

-

-- web ui : http://localhost:5000

-1. Create Project

-2. Use [scrapyd-client](https://github.com/scrapy/scrapyd-client) to generate egg file

+1. Run `spiderkeeper`

- scrapyd-deploy --build-egg output.egg

+2. Visit http://localhost:5000/

-2. upload egg file (make sure you started scrapyd server)

+3. Upload the egg file (make sure the scrapyd server is running)

-3. Done & Enjoy it

+4. Done & Enjoy it

- api swagger: http://localhost:5000/api.html

-```

-

-## TODO

-- [ ] Job dashboard support filter

-- [x] User Authentication

-- [ ] Collect & Show scrapy crawl stats

-- [ ] Optimize load balancing

-

-## Versioning

-

-We use [SemVer](http://semver.org/) for versioning. For the versions available, see the [tags on this repository](https://github.com/DormyMo/SpiderKeeper/tags).

+

## Authors

- *Initial work* - [DormyMo](https://github.com/DormyMo)

+- *Fork author* - [kalombo](https://github.com/kalombos/)

-See also the list of [contributors](https://github.com/DormyMo/SpiderKeeper/contributors) who participated in this project.

## License

-This project is licensed under the MIT License - see the [LICENSE.md](LICENSE.md) file for details

+This project is licensed under the MIT License.

## Contributing

Contributions are welcomed!

-## 交流反馈

-

-## 捐赠

-
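The README advertises an API with Swagger docs at `/api.html`. In `api.py`, the registered routes include `/api/projects` and `/api/projects/<project_id>/spiders`. Below is a minimal stdlib sketch for building an authenticated request against one of them. It assumes the server listens on `http://localhost:5000` and that, if basic auth is enabled, it uses the `admin`/`admin` defaults documented in the removed `--username`/`--password` options. This fork may configure them differently. `build_api_request` and `spiders_path` are hypothetical helpers.

```python
import base64
import urllib.request


def build_api_request(base_url, path, username='admin', password='admin'):
    """Build a GET request for a SpiderKeeper API path, with HTTP basic auth.

    The admin/admin defaults are an assumption taken from the original
    SpiderKeeper CLI options; adjust them to your configuration.
    """
    credentials = '%s:%s' % (username, password)
    token = base64.b64encode(credentials.encode('utf-8')).decode('ascii')
    request = urllib.request.Request(base_url.rstrip('/') + path, method='GET')
    request.add_header('Authorization', 'Basic ' + token)
    return request


def spiders_path(project_id):
    """Path of the route registered for SpiderCtrl in api.py."""
    return '/api/projects/%d/spiders' % project_id
```

To send it, something like `json.load(urllib.request.urlopen(build_api_request('http://localhost:5000', spiders_path(1))))` would return the spider list for project 1, provided the server is running.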

diff --git a/SpiderKeeper/__init__.py b/SpiderKeeper/__init__.py

index 164a18e9..11df62e9 100644

--- a/SpiderKeeper/__init__.py

+++ b/SpiderKeeper/__init__.py

@@ -1,2 +1,2 @@

-__version__ = '1.2.0'

-__author__ = 'Dormy Mo'

+__version__ = '0.3.1'

+__author__ = 'kalombo'

diff --git a/SpiderKeeper/app/__init__.py b/SpiderKeeper/app/__init__.py

index 8a2ead4d..544321b8 100644

--- a/SpiderKeeper/app/__init__.py

+++ b/SpiderKeeper/app/__init__.py

@@ -1,129 +1,96 @@

-# Import flask and template operators

-import logging

+import datetime

import traceback

-

-import apscheduler

-from apscheduler.schedulers.background import BackgroundScheduler

-from flask import Flask

-from flask import jsonify

+from flask import Flask, session, jsonify

from flask_basicauth import BasicAuth

-from flask_restful import Api

-from flask_restful_swagger import swagger

-from flask_sqlalchemy import SQLAlchemy

from werkzeug.exceptions import HTTPException

import SpiderKeeper

-from SpiderKeeper import config

-

-# Define the WSGI application object

-app = Flask(__name__)

-# Configurations

-app.config.from_object(config)

-

-# Logging

-log = logging.getLogger('werkzeug')

-log.setLevel(logging.ERROR)

-formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

-handler = logging.StreamHandler()

-handler.setFormatter(formatter)

-app.logger.setLevel(app.config.get('LOG_LEVEL', "INFO"))

-app.logger.addHandler(handler)

-

-# swagger

-api = swagger.docs(Api(app), apiVersion=SpiderKeeper.__version__, api_spec_url="/api",

- description='SpiderKeeper')

-# Define the database object which is imported

-# by modules and controllers

-db = SQLAlchemy(app, session_options=dict(autocommit=False, autoflush=True))

-

-

-@app.teardown_request

-def teardown_request(exception):

- if exception:

- db.session.rollback()

- db.session.remove()

- db.session.remove()

-

-# Define apscheduler

-scheduler = BackgroundScheduler()

-

-

-class Base(db.Model):

- __abstract__ = True

-

- id = db.Column(db.Integer, primary_key=True)

- date_created = db.Column(db.DateTime, default=db.func.current_timestamp())

- date_modified = db.Column(db.DateTime, default=db.func.current_timestamp(),

- onupdate=db.func.current_timestamp())

-

-

-# Sample HTTP error handling

-# @app.errorhandler(404)

-# def not_found(error):

-# abort(404)

-

-

-@app.errorhandler(Exception)

-def handle_error(e):

- code = 500

- if isinstance(e, HTTPException):

- code = e.code

- app.logger.error(traceback.print_exc())

- return jsonify({

- 'code': code,

- 'success': False,

- 'msg': str(e),

- 'data': None

- })

-

-

-# Build the database:

-from SpiderKeeper.app.spider.model import *

-

-

-def init_database():

- db.init_app(app)

- db.create_all()

-

-

-# regist spider service proxy

-from SpiderKeeper.app.proxy.spiderctrl import SpiderAgent

+from SpiderKeeper.app.blueprints.dashboard.views import dashboard_bp

+from SpiderKeeper.app.blueprints.dashboard.api import api

+from SpiderKeeper.app.blueprints.dashboard.model import Project, SpiderInstance

+from SpiderKeeper.app.proxy import agent

from SpiderKeeper.app.proxy.contrib.scrapy import ScrapydProxy

+from SpiderKeeper.app.extensions.sqlalchemy import db

-agent = SpiderAgent()

-

-def regist_server():

+def register_server(app):

if app.config.get('SERVER_TYPE') == 'scrapyd':

for server in app.config.get("SERVERS"):

agent.regist(ScrapydProxy(server))

-from SpiderKeeper.app.spider.controller import api_spider_bp

-

-# Register blueprint(s)

-app.register_blueprint(api_spider_bp)

-

-# start sync job status scheduler

-from SpiderKeeper.app.schedulers.common import sync_job_execution_status_job, sync_spiders, \

- reload_runnable_spider_job_execution

-

-scheduler.add_job(sync_job_execution_status_job, 'interval', seconds=5, id='sys_sync_status')

-scheduler.add_job(sync_spiders, 'interval', seconds=10, id='sys_sync_spiders')

-scheduler.add_job(reload_runnable_spider_job_execution, 'interval', seconds=30, id='sys_reload_job')

-

-

-def start_scheduler():

- scheduler.start()

-

-

-def init_basic_auth():

+def init_basic_auth(app):

if not app.config.get('NO_AUTH'):

- basic_auth = BasicAuth(app)

+ BasicAuth(app)

-def initialize():

- init_database()

- regist_server()

- start_scheduler()

- init_basic_auth()

+def init_database(app):

+ db.init_app(app)

+ with app.app_context():

+ # Flask-SQLAlchemy needs an application context to know which app is

+ # "current", so db.create_all() is called inside this block.

+ db.create_all()

+

+

+def register_extensions(app):

+ init_database(app)

+ init_basic_auth(app)

+ api.init_app(app)

+

+

+def register_blueprints(app):

+ # Register blueprint(s)

+ app.register_blueprint(dashboard_bp)

+

+

+def create_flask_application(config):

+ # Define the WSGI application object

+ app = Flask(__name__)

+ # Configurations

+ app.config.from_object(config)

+ app.jinja_env.globals['sk_version'] = SpiderKeeper.__version__

+ register_extensions(app)

+ register_blueprints(app)

+ register_server(app)

+

+ @app.context_processor

+ def inject_common():

+ return dict(now=datetime.datetime.now(),

+ servers=agent.servers)

+

+ @app.context_processor

+ def inject_project():

+ project_context = {}

+ project = None

+ projects = Project.query.all()

+ project_context['project_list'] = projects

+ if projects:

+ project = projects[0]

+

+ project_id = session.get('project_id')

+ if isinstance(project_id, int):

+ project = Project.query.get(project_id) or project

+

+ if project:

+ session['project_id'] = project.id

+ project_context['project'] = project

+ project_context['spider_list'] = [

+ spider_instance.to_dict() for spider_instance in

+ SpiderInstance.query.filter_by(project_id=project.id).all()]

+ else:

+ project_context['project'] = {}

+ return project_context

+

+ @app.errorhandler(Exception)

+ def handle_error(e):

+ code = 500

+ if isinstance(e, HTTPException):

+ code = e.code

+ app.logger.error(traceback.format_exc())

+ return jsonify({

+ 'code': code,

+ 'success': False,

+ 'msg': str(e),

+ 'data': None

+ })

+ return app
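This change removes the in-process `BackgroundScheduler` and its three interval jobs from the application package: `sys_sync_status` every 5 s, `sys_sync_spiders` every 10 s, and `sys_reload_job` every 30 s. According to the changelog, the scheduler now lives in its own module and should run in a separate process under WSGI. The sketch below shows the shape of such a standalone interval loop, under clear assumptions. It uses the standard library's `sched` module instead of APScheduler, which the project actually uses. `run_interval_jobs` and its injectable clock are hypothetical, added so the loop can be exercised without real waiting.

```python
import sched
import time


def run_interval_jobs(jobs, duration, timefunc=time.monotonic, delayfunc=time.sleep):
    """Run each (interval_seconds, func) pair every interval_seconds for `duration` seconds.

    Hypothetical stand-in for an APScheduler interval setup: as with an
    interval trigger, every job first fires one interval after start.
    """
    scheduler = sched.scheduler(timefunc, delayfunc)
    start = timefunc()
    deadline = start + duration

    def make_runner(interval, func, run_at):
        def runner():
            func()
            # Plan the next run from the scheduled time, not the finish time,
            # so slow jobs do not make the schedule drift.
            next_run = run_at + interval
            if next_run <= deadline:
                scheduler.enterabs(next_run, 0, make_runner(interval, func, next_run))
        return runner

    for interval, func in jobs:
        first_run = start + interval
        if first_run <= deadline:
            scheduler.enterabs(first_run, 0, make_runner(interval, func, first_run))
    scheduler.run()
```

In a real deployment, the loop would use the default clock and run in a process started separately from the WSGI workers, so each job fires once rather than once per worker.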

diff --git a/SpiderKeeper/app/schedulers/__init__.py b/SpiderKeeper/app/blueprints/__init__.py

similarity index 100%

rename from SpiderKeeper/app/schedulers/__init__.py

rename to SpiderKeeper/app/blueprints/__init__.py

diff --git a/SpiderKeeper/app/spider/__init__.py b/SpiderKeeper/app/blueprints/dashboard/__init__.py

similarity index 100%

rename from SpiderKeeper/app/spider/__init__.py

rename to SpiderKeeper/app/blueprints/dashboard/__init__.py

diff --git a/SpiderKeeper/app/spider/controller.py b/SpiderKeeper/app/blueprints/dashboard/api.py

similarity index 60%

rename from SpiderKeeper/app/spider/controller.py

rename to SpiderKeeper/app/blueprints/dashboard/api.py

index 592b2768..20a7a839 100644

--- a/SpiderKeeper/app/spider/controller.py

+++ b/SpiderKeeper/app/blueprints/dashboard/api.py

@@ -1,26 +1,19 @@

-import datetime

-import os

-import tempfile

-

+# -*- coding: utf-8 -*-

import flask_restful

-import requests

-from flask import Blueprint, request

-from flask import abort

-from flask import flash

-from flask import redirect

-from flask import render_template

-from flask import session

from flask_restful_swagger import swagger

-from werkzeug.utils import secure_filename

-

-from SpiderKeeper.app import db, api, agent, app

-from SpiderKeeper.app.spider.model import JobInstance, Project, JobExecution, SpiderInstance, JobRunType

+from flask import request, abort

+from flask_restful import Api

+import SpiderKeeper

+from SpiderKeeper.app.proxy import agent

+from SpiderKeeper.app.extensions.sqlalchemy import db

+from SpiderKeeper.app.blueprints.dashboard.model import JobInstance, Project, JobExecution, \

+ SpiderInstance, JobRunType

-api_spider_bp = Blueprint('spider', __name__)

-'''

-========= api =========

-'''

+api = swagger.docs(

+ Api(), apiVersion=SpiderKeeper.__version__,

+ api_spec_url="/api", description='SpiderKeeper'

+)

class ProjectCtrl(flask_restful.Resource):

@@ -47,6 +40,8 @@ def post(self):

db.session.commit()

return project.to_dict()

+api.add_resource(ProjectCtrl, "/api/projects")

+

class SpiderCtrl(flask_restful.Resource):

@swagger.operation(

@@ -59,10 +54,11 @@ class SpiderCtrl(flask_restful.Resource):

"dataType": 'int'

}])

def get(self, project_id):

- project = Project.find_project_by_id(project_id)

return [spider_instance.to_dict() for spider_instance in

SpiderInstance.query.filter_by(project_id=project_id).all()]

+api.add_resource(SpiderCtrl, "/api/projects/<project_id>/spiders")

+

class SpiderDetailCtrl(flask_restful.Resource):

@swagger.operation(

@@ -140,6 +136,8 @@ def put(self, project_id, spider_id):

agent.start_spider(job_instance)

return True

+api.add_resource(SpiderDetailCtrl, "/api/projects/<project_id>/spiders/<spider_id>")

+

JOB_INSTANCE_FIELDS = [column.name for column in JobInstance.__table__.columns]

JOB_INSTANCE_FIELDS.remove('id')

@@ -258,6 +256,8 @@ def post(self, project_id):

db.session.commit()

return True

+api.add_resource(JobCtrl, "/api/projects/<project_id>/jobs")

+

class JobDetailCtrl(flask_restful.Resource):

@swagger.operation(

@@ -376,6 +376,8 @@ def put(self, project_id, job_id):

agent.start_spider(job_instance)

return True

+api.add_resource(JobDetailCtrl, "/api/projects/<project_id>/jobs/<job_id>")

+

class JobExecutionCtrl(flask_restful.Resource):

@swagger.operation(

@@ -390,6 +392,8 @@ class JobExecutionCtrl(flask_restful.Resource):

def get(self, project_id):

return JobExecution.list_jobs(project_id)

+api.add_resource(JobExecutionCtrl, "/api/projects/<project_id>/jobexecs")

+

class JobExecutionDetailCtrl(flask_restful.Resource):

@swagger.operation(

@@ -417,250 +421,4 @@ def put(self, project_id, job_exec_id):

agent.cancel_spider(job_execution)

return True

-

-api.add_resource(ProjectCtrl, "/api/projects")

-api.add_resource(SpiderCtrl, "/api/projects/<project_id>/spiders")

-api.add_resource(SpiderDetailCtrl, "/api/projects/<project_id>/spiders/<spider_id>")

-api.add_resource(JobCtrl, "/api/projects/<project_id>/jobs")

-api.add_resource(JobDetailCtrl, "/api/projects/<project_id>/jobs/<job_id>")

-api.add_resource(JobExecutionCtrl, "/api/projects/<project_id>/jobexecs")

api.add_resource(JobExecutionDetailCtrl, "/api/projects/<project_id>/jobexecs/<job_exec_id>")

-

-'''

-========= Router =========

-'''

-

-

-@app.before_request

-def intercept_no_project():

- if request.path.find('/project//') > -1:

- flash("create project first")

- return redirect("/project/manage", code=302)

-

-

-@app.context_processor

-def inject_common():

- return dict(now=datetime.datetime.now(),

- servers=agent.servers)

-

-

-@app.context_processor

-def inject_project():

- project_context = {}

- project_context['project_list'] = Project.query.all()

- if project_context['project_list'] and (not session.get('project_id')):

- project = Project.query.first()

- session['project_id'] = project.id

- if session.get('project_id'):

- project_context['project'] = Project.find_project_by_id(session['project_id'])

- project_context['spider_list'] = [spider_instance.to_dict() for spider_instance in

- SpiderInstance.query.filter_by(project_id=session['project_id']).all()]

- else:

- project_context['project'] = {}

- return project_context

-

-

-@app.context_processor

-def utility_processor():

- def timedelta(end_time, start_time):

- '''

-

- :param end_time:

- :param start_time:

- :param unit: s m h

- :return:

- '''

- if not end_time or not start_time:

- return ''

- if type(end_time) == str:

- end_time = datetime.datetime.strptime(end_time, '%Y-%m-%d %H:%M:%S')

- if type(start_time) == str:

- start_time = datetime.datetime.strptime(start_time, '%Y-%m-%d %H:%M:%S')

- total_seconds = (end_time - start_time).total_seconds()

- return readable_time(total_seconds)

-

- def readable_time(total_seconds):

- if not total_seconds:

- return '-'

- if total_seconds / 60 == 0:

- return '%s s' % total_seconds

- if total_seconds / 3600 == 0:

- return '%s m' % int(total_seconds / 60)

- return '%s h %s m' % (int(total_seconds / 3600), int((total_seconds % 3600) / 60))

-

- return dict(timedelta=timedelta, readable_time=readable_time)

-

-

-@app.route("/")

-def index():

- project = Project.query.first()

- if project:

- return redirect("/project/%s/job/dashboard" % project.id, code=302)

- return redirect("/project/manage", code=302)

-

-

-@app.route("/project/<project_id>")

-def project_index(project_id):

- session['project_id'] = project_id

- return redirect("/project/%s/job/dashboard" % project_id, code=302)

-

-

-@app.route("/project/create", methods=['post'])

-def project_create():

- project_name = request.form['project_name']

- project = Project()

- project.project_name = project_name

- db.session.add(project)

- db.session.commit()

- return redirect("/project/%s/spider/deploy" % project.id, code=302)

-

-

-@app.route("/project/<project_id>/delete")

-def project_delete(project_id):

- project = Project.find_project_by_id(project_id)

- agent.delete_project(project)

- db.session.delete(project)

- db.session.commit()

- return redirect("/project/manage", code=302)

-

-

-@app.route("/project/manage")

-def project_manage():

- return render_template("project_manage.html")

-

-

-@app.route("/project/<project_id>/job/dashboard")

-def job_dashboard(project_id):

- return render_template("job_dashboard.html", job_status=JobExecution.list_jobs(project_id))

-

-

-@app.route("/project/<project_id>/job/periodic")

-def job_periodic(project_id):

- project = Project.find_project_by_id(project_id)

- job_instance_list = [job_instance.to_dict() for job_instance in

- JobInstance.query.filter_by(run_type="periodic", project_id=project_id).all()]

- return render_template("job_periodic.html",

- job_instance_list=job_instance_list)

-

-

-@app.route("/project/<project_id>/job/add", methods=['post'])

-def job_add(project_id):

- project = Project.find_project_by_id(project_id)

- job_instance = JobInstance()

- job_instance.spider_name = request.form['spider_name']

- job_instance.project_id = project_id

- job_instance.spider_arguments = request.form['spider_arguments']

- job_instance.priority = request.form.get('priority', 0)

- job_instance.run_type = request.form['run_type']

- # chose daemon manually

- if request.form['daemon'] != 'auto':

- spider_args = []

- if request.form['spider_arguments']:

- spider_args = request.form['spider_arguments'].split(",")

- spider_args.append("daemon={}".format(request.form['daemon']))

- job_instance.spider_arguments = ','.join(spider_args)

- if job_instance.run_type == JobRunType.ONETIME:

- job_instance.enabled = -1

- db.session.add(job_instance)

- db.session.commit()

- agent.start_spider(job_instance)

- if job_instance.run_type == JobRunType.PERIODIC:

- job_instance.cron_minutes = request.form.get('cron_minutes') or '0'

- job_instance.cron_hour = request.form.get('cron_hour') or '*'

- job_instance.cron_day_of_month = request.form.get('cron_day_of_month') or '*'

- job_instance.cron_day_of_week = request.form.get('cron_day_of_week') or '*'

- job_instance.cron_month = request.form.get('cron_month') or '*'

- # set cron exp manually

- if request.form.get('cron_exp'):

- job_instance.cron_minutes, job_instance.cron_hour, job_instance.cron_day_of_month, job_instance.cron_day_of_week, job_instance.cron_month = \

- request.form['cron_exp'].split(' ')

- db.session.add(job_instance)

- db.session.commit()

- return redirect(request.referrer, code=302)

-

-

-@app.route("/project/<project_id>/jobexecs/<job_exec_id>/stop")

-def job_stop(project_id, job_exec_id):

- job_execution = JobExecution.query.filter_by(project_id=project_id, id=job_exec_id).first()

- agent.cancel_spider(job_execution)

- return redirect(request.referrer, code=302)

-

-

-@app.route("/project/<project_id>/jobexecs/<job_exec_id>/log")

-def job_log(project_id, job_exec_id):

- job_execution = JobExecution.query.filter_by(project_id=project_id, id=job_exec_id).first()

- res = requests.get(agent.log_url(job_execution))

- res.encoding = 'utf8'

- raw = res.text

- return render_template("job_log.html", log_lines=raw.split('\n'))

-

-

-@app.route("/project/<project_id>/job/<job_instance_id>/run")

-def job_run(project_id, job_instance_id):

- job_instance = JobInstance.query.filter_by(project_id=project_id, id=job_instance_id).first()

- agent.start_spider(job_instance)

- return redirect(request.referrer, code=302)

-

-

-@app.route("/project/<project_id>/job/<job_instance_id>/remove")

-def job_remove(project_id, job_instance_id):

- job_instance = JobInstance.query.filter_by(project_id=project_id, id=job_instance_id).first()

- db.session.delete(job_instance)

- db.session.commit()

- return redirect(request.referrer, code=302)

-

-

-@app.route("/project/<project_id>/job/<job_instance_id>/switch")

-def job_switch(project_id, job_instance_id):

- job_instance = JobInstance.query.filter_by(project_id=project_id, id=job_instance_id).first()

- job_instance.enabled = -1 if job_instance.enabled == 0 else 0

- db.session.commit()

- return redirect(request.referrer, code=302)

-

-

-@app.route("/project/<project_id>/spider/dashboard")

-def spider_dashboard(project_id):

- spider_instance_list = SpiderInstance.list_spiders(project_id)

- return render_template("spider_dashboard.html",

- spider_instance_list=spider_instance_list)

-

-

-@app.route("/project/<project_id>/spider/deploy")

-def spider_deploy(project_id):

- project = Project.find_project_by_id(project_id)

- return render_template("spider_deploy.html")

-

-

-@app.route("/project/<project_id>/spider/upload", methods=['post'])

-def spider_egg_upload(project_id):

- project = Project.find_project_by_id(project_id)

- if 'file' not in request.files:

- flash('No file part')

- return redirect(request.referrer)

- file = request.files['file']

- # if user does not select file, browser also

- # submit a empty part without filename

- if file.filename == '':

- flash('No selected file')

- return redirect(request.referrer)

- if file:

- filename = secure_filename(file.filename)

- dst = os.path.join(tempfile.gettempdir(), filename)

- file.save(dst)

- agent.deploy(project, dst)

- flash('deploy success!')

- return redirect(request.referrer)

-

-

-@app.route("/project/<project_id>/project/stats")

-def project_stats(project_id):

- project = Project.find_project_by_id(project_id)

- run_stats = JobExecution.list_run_stats_by_hours(project_id)

- return render_template("project_stats.html", run_stats=run_stats)

-

-

-@app.route("/project/<project_id>/server/stats")

-def service_stats(project_id):

- project = Project.find_project_by_id(project_id)

- run_stats = JobExecution.list_run_stats_by_hours(project_id)

- return render_template("server_stats.html", run_stats=run_stats)
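The `readable_time` helper removed above chooses between seconds, minutes and hours by testing `total_seconds / 60 == 0` and `total_seconds / 3600 == 0`. Under Python 3, `/` is true division, so these tests hold only for zero, which the first branch already handles. Every other duration falls through to the hours branch, so 45 seconds renders as `0 h 0 m`. A corrected sketch compares against thresholds and uses floor division instead:

```python
def readable_time(total_seconds):
    """Format a duration in seconds as 'N s', 'N m' or 'N h N m'."""
    if not total_seconds:
        return '-'
    total_seconds = int(total_seconds)
    if total_seconds < 60:
        return '%s s' % total_seconds
    if total_seconds < 3600:
        return '%s m' % (total_seconds // 60)
    return '%s h %s m' % (total_seconds // 3600, (total_seconds % 3600) // 60)
```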

diff --git a/SpiderKeeper/app/spider/model.py b/SpiderKeeper/app/blueprints/dashboard/model.py

similarity index 71%

rename from SpiderKeeper/app/spider/model.py

rename to SpiderKeeper/app/blueprints/dashboard/model.py

index 5376602b..7d39bd9b 100644

--- a/SpiderKeeper/app/spider/model.py

+++ b/SpiderKeeper/app/blueprints/dashboard/model.py

@@ -1,6 +1,9 @@

import datetime

+import demjson

+import re

from sqlalchemy import desc

-from SpiderKeeper.app import db, Base

+from sqlalchemy.orm import relation

+from SpiderKeeper.app.extensions.sqlalchemy import db, Base

class Project(Base):

@@ -16,10 +19,6 @@ def load_project(cls, project_list):

db.session.add(project)

db.session.commit()

- @classmethod

- def find_project_by_id(cls, project_id):

- return Project.query.filter_by(id=project_id).first()

-

def to_dict(self):

return {

"project_id": self.id,

@@ -31,13 +30,19 @@ class SpiderInstance(Base):

__tablename__ = 'sk_spider'

spider_name = db.Column(db.String(100))

- project_id = db.Column(db.INTEGER, nullable=False, index=True)

+

+ project_id = db.Column(

+ db.Integer, db.ForeignKey('sk_project.id', ondelete='CASCADE'), nullable=False,

+ index=True

+ )

+ project = relation(Project)

@classmethod

def update_spider_instances(cls, project_id, spider_instance_list):

for spider_instance in spider_instance_list:

- existed_spider_instance = cls.query.filter_by(project_id=project_id,

- spider_name=spider_instance.spider_name).first()

+ existed_spider_instance = cls.query.filter_by(

+ project_id=project_id, spider_name=spider_instance.spider_name

+ ).first()

if not existed_spider_instance:

db.session.add(spider_instance)

db.session.commit()

@@ -60,41 +65,12 @@ def to_dict(self):

spider_name=self.spider_name,

project_id=self.project_id)

- @classmethod

- def list_spiders(cls, project_id):

- sql_last_runtime = '''

- select * from (select a.spider_name,b.date_created from sk_job_instance as a

- left join sk_job_execution as b

- on a.id = b.job_instance_id

- order by b.date_created desc) as c

- group by c.spider_name

- '''

- sql_avg_runtime = '''

- select a.spider_name,avg(end_time-start_time) from sk_job_instance as a

- left join sk_job_execution as b

- on a.id = b.job_instance_id

- where b.end_time is not null

- group by a.spider_name

- '''

- last_runtime_list = dict(

- (spider_name, last_run_time) for spider_name, last_run_time in db.engine.execute(sql_last_runtime))

- avg_runtime_list = dict(

- (spider_name, avg_run_time) for spider_name, avg_run_time in db.engine.execute(sql_avg_runtime))

- res = []

- for spider in cls.query.filter_by(project_id=project_id).all():

- last_runtime = last_runtime_list.get(spider.spider_name)

- res.append(dict(spider.to_dict(),

- **{'spider_last_runtime': last_runtime if last_runtime else '-',

- 'spider_avg_runtime': avg_runtime_list.get(spider.spider_name)

- }))

- return res

-

-class JobPriority():

+class JobPriority:

LOW, NORMAL, HIGH, HIGHEST = range(-1, 3)

-class JobRunType():

+class JobRunType:

ONETIME = 'onetime'

PERIODIC = 'periodic'

@@ -103,22 +79,28 @@ class JobInstance(Base):

__tablename__ = 'sk_job_instance'

spider_name = db.Column(db.String(100), nullable=False, index=True)

- project_id = db.Column(db.INTEGER, nullable=False, index=True)

+ project_id = db.Column(

+ db.Integer, db.ForeignKey('sk_project.id', ondelete='CASCADE'), nullable=False,

+ index=True

+ )

+ project = relation(Project)

+

tags = db.Column(db.Text) # job tag(split by , )

spider_arguments = db.Column(db.Text) # job execute arguments(split by , ex.: arg1=foo,arg2=bar)

- priority = db.Column(db.INTEGER)

+ priority = db.Column(db.Integer)

desc = db.Column(db.Text)

cron_minutes = db.Column(db.String(20), default="0")

cron_hour = db.Column(db.String(20), default="*")

cron_day_of_month = db.Column(db.String(20), default="*")

cron_day_of_week = db.Column(db.String(20), default="*")

cron_month = db.Column(db.String(20), default="*")

- enabled = db.Column(db.INTEGER, default=0) # 0/-1

+ enabled = db.Column(db.Integer, default=0) # 0/-1

run_type = db.Column(db.String(20)) # periodic/onetime

def to_dict(self):

return dict(

job_instance_id=self.id,

+ project_id=self.project_id,

spider_name=self.spider_name,

tags=self.tags.split(',') if self.tags else None,

spider_arguments=self.spider_arguments,

@@ -138,29 +120,52 @@ def to_dict(self):

def list_job_instance_by_project_id(cls, project_id):

return cls.query.filter_by(project_id=project_id).all()

- @classmethod

- def find_job_instance_by_id(cls, job_instance_id):

- return cls.query.filter_by(id=job_instance_id).first()

-

-class SpiderStatus():

+class SpiderStatus:

PENDING, RUNNING, FINISHED, CANCELED = range(4)

class JobExecution(Base):

__tablename__ = 'sk_job_execution'

- project_id = db.Column(db.INTEGER, nullable=False, index=True)

+ # Redundant: project_id is also reachable via job_instance; candidate for removal

+ project_id = db.Column(db.Integer, nullable=False, index=True)

service_job_execution_id = db.Column(db.String(50), nullable=False, index=True)

- job_instance_id = db.Column(db.INTEGER, nullable=False, index=True)

- create_time = db.Column(db.DATETIME)

- start_time = db.Column(db.DATETIME)

- end_time = db.Column(db.DATETIME)

- running_status = db.Column(db.INTEGER, default=SpiderStatus.PENDING)

+ job_instance_id = db.Column(

+ db.Integer, db.ForeignKey('sk_job_instance.id', ondelete='CASCADE'), nullable=False,

+ index=True

+ )

+ job_instance = relation(JobInstance)

+ create_time = db.Column(db.DateTime)

+ start_time = db.Column(db.DateTime)

+ end_time = db.Column(db.DateTime)

+ running_status = db.Column(db.Integer, default=SpiderStatus.PENDING)

running_on = db.Column(db.Text)

+ raw_stats = db.Column(db.Text)

+ items_count = db.Column(db.Integer)

+ warnings_count = db.Column(db.Integer)

+ errors_count = db.Column(db.Integer)

+

+ RAW_STATS_REGEX = r'\[scrapy\.statscollectors\][^{]+({[^}]+})'

+

+ def process_raw_stats(self):

+ if self.raw_stats is None:

+ return

+ datetime_regex = r'(datetime\.datetime\([^)]+\))'

+ self.raw_stats = re.sub(datetime_regex, r"'\1'", self.raw_stats)

+ stats = demjson.decode(self.raw_stats)

+ self.items_count = stats.get('item_scraped_count') or 0

+ self.warnings_count = stats.get('log_count/WARNING') or 0

+ self.errors_count = stats.get('log_count/ERROR') or 0

+

+ def has_warnings(self):

+ return bool(not self.raw_stats or not self.items_count or self.warnings_count)

+

+ def has_errors(self):

+ return bool(self.errors_count)

+

def to_dict(self):

- job_instance = JobInstance.query.filter_by(id=self.job_instance_id).first()

return {

'project_id': self.project_id,

'job_execution_id': self.id,

@@ -171,7 +176,12 @@ def to_dict(self):

'end_time': self.end_time.strftime('%Y-%m-%d %H:%M:%S') if self.end_time else None,

'running_status': self.running_status,

'running_on': self.running_on,

- 'job_instance': job_instance.to_dict() if job_instance else {}

+ 'job_instance': self.job_instance.to_dict() if self.job_instance else {},

+ 'has_warnings': self.has_warnings(),

+ 'has_errors': self.has_errors(),

+ 'items_count': self.items_count if self.items_count is not None else '-',

+ 'warnings_count': self.warnings_count if self.warnings_count is not None else '-',

+ 'errors_count': self.errors_count if self.errors_count is not None else '-'

}

@classmethod
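The new `process_raw_stats` takes the stats dict that Scrapy dumps at the end of a crawl (matched by `RAW_STATS_REGEX`), quotes any `datetime.datetime(...)` reprs, and decodes the result with `demjson`. The sketch below follows the same steps with one swap: it uses the standard library's `ast.literal_eval` instead of demjson, on the assumption that the dump is a Python dict repr. `parse_scrapy_stats` is a hypothetical name, and the sample log in the test is made up for illustration.

```python
import ast
import re

# Same patterns as RAW_STATS_REGEX / datetime_regex in the diff, as raw strings.
RAW_STATS_REGEX = re.compile(r"\[scrapy\.statscollectors\][^{]+({[^}]+})")
DATETIME_REGEX = re.compile(r"(datetime\.datetime\([^)]+\))")


def parse_scrapy_stats(log_text):
    """Extract item/warning/error counts from the stats dump in a Scrapy log.

    Returns None when the log contains no stats dump (e.g. a crawl still running).
    """
    match = RAW_STATS_REGEX.search(log_text)
    if match is None:
        return None
    # Quote datetime reprs so the dump becomes a plain Python literal.
    raw = DATETIME_REGEX.sub(r"'\1'", match.group(1))
    stats = ast.literal_eval(raw)
    return {
        'items_count': stats.get('item_scraped_count') or 0,
        'warnings_count': stats.get('log_count/WARNING') or 0,
        'errors_count': stats.get('log_count/ERROR') or 0,
    }
```

As in the diff's pattern, `[^}]+` stops at the first closing brace, so a stats value that contains `}` would truncate the match.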

diff --git a/SpiderKeeper/app/blueprints/dashboard/views.py b/SpiderKeeper/app/blueprints/dashboard/views.py

new file mode 100644

index 00000000..878a917a

--- /dev/null

+++ b/SpiderKeeper/app/blueprints/dashboard/views.py

@@ -0,0 +1,263 @@

+import datetime

+import os

+import tempfile

+

+

+import requests

+from flask import Blueprint, request, Response

+from flask import url_for

+from flask import flash

+from flask import redirect

+from flask import render_template

+from flask import session

+from sqlalchemy import func

+from werkzeug.utils import secure_filename

+

+from SpiderKeeper.app.proxy import agent

+from SpiderKeeper.app.extensions.sqlalchemy import db

+from SpiderKeeper.app.blueprints.dashboard.model import JobInstance, Project, JobExecution, \

+ SpiderInstance, JobRunType

+

+dashboard_bp = Blueprint('dashboard', __name__)

+

+

+@dashboard_bp.context_processor

+def utility_processor():

+ def timedelta(end_time, start_time):

+ """

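+ Return a human-readable duration between two timestamps.

+

+ :param end_time: datetime or '%Y-%m-%d %H:%M:%S' string

+ :param start_time: datetime or '%Y-%m-%d %H:%M:%S' string

+ :return: '' if either value is missing, otherwise readable_time() of the difference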

+ """

+ if not end_time or not start_time:

+ return ''

+ if isinstance(end_time, str):

+ end_time = datetime.datetime.strptime(end_time, '%Y-%m-%d %H:%M:%S')

+ if isinstance(start_time, str):

+ start_time = datetime.datetime.strptime(start_time, '%Y-%m-%d %H:%M:%S')

+ total_seconds = (end_time - start_time).total_seconds()

+ return readable_time(total_seconds)

+

+ def readable_time(total_seconds):

+ if not total_seconds:

+ return '-'

+ if total_seconds < 60:

+ return '%s s' % int(total_seconds)

+ if total_seconds < 3600:

+ return '%s m' % int(total_seconds / 60)

+ return '%s h %s m' % (int(total_seconds / 3600), int((total_seconds % 3600) / 60))

+

+ return dict(timedelta=timedelta, readable_time=readable_time)

+

+

+@dashboard_bp.route("/")

+def index():

+ project = Project.query.first()

+ if project:

+ return redirect(url_for('dashboard.job_dashboard', project_id=project.id))

+ return render_template("index.html")

+

+

+@dashboard_bp.route("/project/<int:project_id>")

+def project_index(project_id):

+ Project.query.get_or_404(project_id)

+ session['project_id'] = project_id

+ return redirect(url_for('dashboard.job_dashboard', project_id=project_id))

+

+

+@dashboard_bp.route("/project/<int:project_id>/delete")

+def project_delete(project_id):

+ project = Project.query.get_or_404(project_id)

+ agent.delete_project(project)

+ db.session.delete(project)

+ db.session.commit()

+ return redirect(url_for('dashboard.index'))

+

+

+@dashboard_bp.route("/project/<int:project_id>/manage")

+def project_manage(project_id):

+ Project.query.get_or_404(project_id)

+ session['project_id'] = project_id

+ return render_template("project_manage.html")

+

+

+@dashboard_bp.route("/project/<int:project_id>/job/dashboard")

+def job_dashboard(project_id):

+ Project.query.get_or_404(project_id)

+ session['project_id'] = project_id

+ return render_template("job_dashboard.html", job_status=JobExecution.list_jobs(project_id))

+

+

+@dashboard_bp.route("/project/<int:project_id>/job/periodic")

+def job_periodic(project_id):

+ Project.query.get_or_404(project_id)

+ session['project_id'] = project_id

+ job_instance_list = [job_instance.to_dict() for job_instance in

+ JobInstance.query.filter_by(run_type="periodic", project_id=project_id).all()]

+ return render_template("job_periodic.html",

+ job_instance_list=job_instance_list)

+

+

+@dashboard_bp.route("/project/<int:project_id>/job/add", methods=['post'])

+def job_add(project_id):

+ Project.query.get_or_404(project_id)

+ job_instance = JobInstance()

+ job_instance.spider_name = request.form['spider_name']

+ job_instance.project_id = project_id

+ job_instance.spider_arguments = request.form['spider_arguments']

+ job_instance.priority = request.form.get('priority', 0)

+ job_instance.run_type = request.form['run_type']

+ # chose daemon manually

+ if request.form['daemon'] != 'auto':

+ spider_args = []

+ if request.form['spider_arguments']:

+ spider_args = request.form['spider_arguments'].split(",")

+ spider_args.append("daemon={}".format(request.form['daemon']))

+ job_instance.spider_arguments = ','.join(spider_args)

+ if job_instance.run_type == JobRunType.ONETIME:

+ job_instance.enabled = -1

+ db.session.add(job_instance)

+ db.session.commit()

+ agent.start_spider(job_instance)

+ if job_instance.run_type == JobRunType.PERIODIC:

+ job_instance.cron_minutes = request.form.get('cron_minutes') or '0'

+ job_instance.cron_hour = request.form.get('cron_hour') or '*'

+ job_instance.cron_day_of_month = request.form.get('cron_day_of_month') or '*'

+ job_instance.cron_day_of_week = request.form.get('cron_day_of_week') or '*'

+ job_instance.cron_month = request.form.get('cron_month') or '*'

+ # set cron exp manually

+ if request.form.get('cron_exp'):

+ job_instance.cron_minutes, job_instance.cron_hour, job_instance.cron_day_of_month, job_instance.cron_month, job_instance.cron_day_of_week = \

+ request.form['cron_exp'].split()

+ db.session.add(job_instance)

+ db.session.commit()

+ return redirect(request.referrer, code=302)

+

+

+@dashboard_bp.route("/project/<int:project_id>/jobexecs/<int:job_exec_id>/stop")

+def job_stop(project_id, job_exec_id):

+ job_execution = JobExecution.query.get_or_404(job_exec_id)

+ agent.cancel_spider(job_execution)

+ return redirect(request.referrer, code=302)

+

+

+@dashboard_bp.route("/project/jobexecs/<int:job_exec_id>/log")

+def job_log(job_exec_id):

+ job_execution = JobExecution.query.get_or_404(job_exec_id)

+ res = requests.get(agent.log_url(job_execution))

+ res.encoding = 'utf8'

+ return Response(res.text, content_type='text/plain; charset=utf-8')

+

+

+@dashboard_bp.route("/project/job/<int:job_instance_id>/run")

+def job_run(job_instance_id):

+ job_instance = JobInstance.query.get_or_404(job_instance_id)

+ agent.start_spider(job_instance)

+ return redirect(request.referrer, code=302)

+

+

+@dashboard_bp.route("/job/<int:job_instance_id>/remove")

+def job_remove(job_instance_id):

+ job_instance = JobInstance.query.get_or_404(job_instance_id)

+ db.session.delete(job_instance)

+ db.session.commit()

+ return redirect(request.referrer, code=302)

+

+

+@dashboard_bp.route("/project/<int:project_id>/jobs/remove")

+def jobs_remove(project_id):

+ for job_instance in JobInstance.query.filter_by(project_id=project_id):

+ db.session.delete(job_instance)

+ db.session.commit()

+ return redirect(request.referrer, code=302)

+

+

+@dashboard_bp.route("/project/<int:project_id>/job/<int:job_instance_id>/switch")

+def job_switch(project_id, job_instance_id):

+ job_instance = JobInstance.query.get_or_404(job_instance_id)

+ job_instance.enabled = -1 if job_instance.enabled == 0 else 0

+ db.session.commit()

+ return redirect(request.referrer, code=302)

+

+

+@dashboard_bp.route("/project/<int:project_id>/spider/dashboard")

+def spider_dashboard(project_id):

+ Project.query.get_or_404(project_id)

+ session['project_id'] = project_id

+ last_runtime_query = db.session.query(

+ SpiderInstance.spider_name,

+ func.Max(JobExecution.date_created).label('last_runtime'),

+ ).outerjoin(JobInstance, JobInstance.spider_name == SpiderInstance.spider_name)\

+ .outerjoin(JobExecution).filter(SpiderInstance.project_id == project_id)\

+ .group_by(SpiderInstance.id)

+

+ last_runtime = dict(

+ (spider_name, last_runtime) for spider_name, last_runtime in last_runtime_query

+ )

+

+ avg_runtime_query = db.session.query(

+ SpiderInstance.spider_name,

+ func.Avg(JobExecution.end_time - JobExecution.start_time).label('avg_runtime'),

+ ).outerjoin(JobInstance, JobInstance.spider_name == SpiderInstance.spider_name)\

+ .outerjoin(JobExecution).filter(SpiderInstance.project_id == project_id)\

+ .filter(JobExecution.end_time.isnot(None))\

+ .group_by(SpiderInstance.id)

+

+ avg_runtime = dict(

+ (spider_name, avg_runtime) for spider_name, avg_runtime in avg_runtime_query

+ )

+

+ spiders = []

+ for spider in SpiderInstance.query.filter(SpiderInstance.project_id == project_id).all():

+ spider.last_runtime = last_runtime.get(spider.spider_name)

+ spider.avg_runtime = avg_runtime.get(spider.spider_name)

+ if spider.avg_runtime is not None:

+ spider.avg_runtime = spider.avg_runtime.total_seconds()

+ spiders.append(spider)

+ return render_template("spider_dashboard.html", spiders=spiders)

+

+

+@dashboard_bp.route("/project/<int:project_id>/spider/deploy")

+def spider_deploy(project_id):

+ Project.query.get_or_404(project_id)

+ session['project_id'] = project_id

+ return render_template("spider_deploy.html")

+

+

+@dashboard_bp.route("/project/<int:project_id>/spider/upload", methods=['post'])

+def spider_egg_upload(project_id):

+ project = Project.query.get_or_404(project_id)

+ if 'file' not in request.files:

+ flash('No file part')

+ return redirect(request.referrer)

+ file = request.files['file']

+ # if the user does not select a file, the browser

+ # submits an empty part without a filename

+ if file.filename == '':

+ flash('No selected file')

+ return redirect(request.referrer)

+ if file:

+ filename = secure_filename(file.filename)

+ dst = os.path.join(tempfile.gettempdir(), filename)

+ file.save(dst)

+ agent.deploy(project, dst)

+ flash('deploy success!')

+ return redirect(request.referrer)

+

+

+@dashboard_bp.route("/project/<int:project_id>/project/stats")

+def project_stats(project_id):

+ Project.query.get_or_404(project_id)

+ session['project_id'] = project_id

+ run_stats = JobExecution.list_run_stats_by_hours(project_id)

+ return render_template("project_stats.html", run_stats=run_stats)

+

+

+@dashboard_bp.route("/project/<int:project_id>/server/stats")

+def service_stats(project_id):

+ Project.query.get_or_404(project_id)

+ session['project_id'] = project_id

+ run_stats = JobExecution.list_run_stats_by_hours(project_id)

+ return render_template("server_stats.html", run_stats=run_stats)
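`job_add` accepts a manual `cron_exp` and spreads it over five `JobInstance` columns. A minimal sketch of that step, assuming the expression uses the standard five-field crontab order (minute, hour, day of month, month, day of week); `CRON_FIELDS` and `parse_cron_exp` are hypothetical names, and the sketch validates the field count instead of failing on tuple unpacking.

```python
# Standard crontab field order mapped to JobInstance column names.
CRON_FIELDS = ('cron_minutes', 'cron_hour', 'cron_day_of_month',
               'cron_month', 'cron_day_of_week')


def parse_cron_exp(cron_exp):
    """Split a five-field crontab expression into JobInstance-style fields."""
    parts = cron_exp.split()  # split() tolerates repeated whitespace
    if len(parts) != len(CRON_FIELDS):
        raise ValueError('expected 5 cron fields, got %d: %r' % (len(parts), cron_exp))
    return dict(zip(CRON_FIELDS, parts))
```

In the view, the result could be applied with `for name, value in parse_cron_exp(exp).items(): setattr(job_instance, name, value)`, which keeps the field order defined in one place.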

diff --git a/SpiderKeeper/config.py b/SpiderKeeper/app/config.py

similarity index 98%

rename from SpiderKeeper/config.py

rename to SpiderKeeper/app/config.py

index 8f0722d9..24558111 100644

--- a/SpiderKeeper/config.py

+++ b/SpiderKeeper/app/config.py

@@ -1,7 +1,7 @@

# Statement for enabling the development environment

import os

-DEBUG = True

+DEBUG = False

# Define the application directory

diff --git a/SpiderKeeper/app/extensions/__init__.py b/SpiderKeeper/app/extensions/__init__.py

new file mode 100644

index 00000000..40a96afc

--- /dev/null

+++ b/SpiderKeeper/app/extensions/__init__.py

@@ -0,0 +1 @@

+# -*- coding: utf-8 -*-

diff --git a/SpiderKeeper/app/extensions/sqlalchemy.py b/SpiderKeeper/app/extensions/sqlalchemy.py

new file mode 100644

index 00000000..c25c651e

--- /dev/null

+++ b/SpiderKeeper/app/extensions/sqlalchemy.py

@@ -0,0 +1,14 @@

+# -*- coding: utf-8 -*-

+

+from flask_sqlalchemy import SQLAlchemy

+

+db = SQLAlchemy()

+

+

+class Base(db.Model):

+ __abstract__ = True

+

+ id = db.Column(db.Integer, primary_key=True)

+ date_created = db.Column(db.DateTime, default=db.func.current_timestamp())

+ date_modified = db.Column(db.DateTime, default=db.func.current_timestamp(),

+ onupdate=db.func.current_timestamp())

diff --git a/SpiderKeeper/app/proxy/__init__.py b/SpiderKeeper/app/proxy/__init__.py

index e69de29b..81ea5fe0 100644

--- a/SpiderKeeper/app/proxy/__init__.py

+++ b/SpiderKeeper/app/proxy/__init__.py

@@ -0,0 +1,3 @@

+from SpiderKeeper.app.proxy.spiderctrl import SpiderAgent

+

+agent = SpiderAgent()

diff --git a/SpiderKeeper/app/proxy/contrib/scrapy.py b/SpiderKeeper/app/proxy/contrib/scrapy.py

index 9acad39e..3eab4b3b 100644

--- a/SpiderKeeper/app/proxy/contrib/scrapy.py

+++ b/SpiderKeeper/app/proxy/contrib/scrapy.py

@@ -1,9 +1,10 @@

-import datetime, time

+import datetime

+import time

import requests

from SpiderKeeper.app.proxy.spiderctrl import SpiderServiceProxy

-from SpiderKeeper.app.spider.model import SpiderStatus, Project, SpiderInstance

+from SpiderKeeper.app.blueprints.dashboard.model import SpiderStatus, Project, SpiderInstance

from SpiderKeeper.app.util.http import request

@@ -31,7 +32,9 @@ def get_project_list(self):

def delete_project(self, project_name):

post_data = dict(project=project_name)

- data = request("post", self._scrapyd_url() + "/delproject.json", data=post_data, return_type="json")

+ data = request(

+ "post", self._scrapyd_url() + "/delproject.json", data=post_data, return_type="json"

+ )

return True if data and data['status'] == 'ok' else False

def get_spider_list(self, project_name):

@@ -57,22 +60,32 @@ def get_job_list(self, project_name, spider_status=None):

for item in data[self.spider_status_name_dict[_status]]:

start_time, end_time = None, None

if item.get('start_time'):

- start_time = datetime.datetime.strptime(item['start_time'], '%Y-%m-%d %H:%M:%S.%f')

+ start_time = datetime.datetime.strptime(

+ item['start_time'], '%Y-%m-%d %H:%M:%S.%f'

+ )

if item.get('end_time'):

- end_time = datetime.datetime.strptime(item['end_time'], '%Y-%m-%d %H:%M:%S.%f')

- result[_status].append(dict(id=item['id'], start_time=start_time, end_time=end_time))

+ end_time = datetime.datetime.strptime(

+ item['end_time'], '%Y-%m-%d %H:%M:%S.%f'

+ )

+ result[_status].append(

+ dict(id=item['id'], start_time=start_time, end_time=end_time)

+ )

return result if not spider_status else result[spider_status]

def start_spider(self, project_name, spider_name, arguments):

post_data = dict(project=project_name, spider=spider_name)

post_data.update(arguments)

- data = request("post", self._scrapyd_url() + "/schedule.json", data=post_data, return_type="json")

+ data = request(

+ "post", self._scrapyd_url() + "/schedule.json", data=post_data, return_type="json"

+ )

return data['jobid'] if data and data['status'] == 'ok' else None

def cancel_spider(self, project_name, job_id):

post_data = dict(project=project_name, job=job_id)

- data = request("post", self._scrapyd_url() + "/cancel.json", data=post_data, return_type="json")

- return data != None

+ data = request(

+ "post", self._scrapyd_url() + "/cancel.json", data=post_data, return_type="json"

+ )

+ return data is not None

def deploy(self, project_name, file_path):

with open(file_path, 'rb') as f:
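`get_job_list` groups scrapyd's `listjobs.json` entries (`pending`, `running`, `finished`) and parses their timestamps with `'%Y-%m-%d %H:%M:%S.%f'`. A sketch assuming those timestamps are `str(datetime)` output, which omits the fractional part when the microsecond is zero; `parse_scrapyd_time` and `parse_job_list` are hypothetical helpers that fall back to a whole-second format instead of raising.

```python
import datetime


def parse_scrapyd_time(value):
    # str(datetime) drops ".%f" when microsecond == 0, so try both formats.
    for fmt in ('%Y-%m-%d %H:%M:%S.%f', '%Y-%m-%d %H:%M:%S'):
        try:
            return datetime.datetime.strptime(value, fmt)
        except ValueError:
            continue
    raise ValueError('unrecognised scrapyd timestamp: %r' % value)


def parse_job_list(data):
    """Group listjobs.json entries by status, with parsed start/end times."""
    result = {}
    for status in ('pending', 'running', 'finished'):
        jobs = []
        for item in data.get(status, []):
            start = item.get('start_time')
            end = item.get('end_time')
            jobs.append(dict(
                id=item['id'],
                start_time=parse_scrapyd_time(start) if start else None,
                end_time=parse_scrapyd_time(end) if end else None,
            ))
        result[status] = jobs
    return result
```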

diff --git a/SpiderKeeper/app/proxy/spiderctrl.py b/SpiderKeeper/app/proxy/spiderctrl.py

index de01ea65..412a9499 100644

--- a/SpiderKeeper/app/proxy/spiderctrl.py

+++ b/SpiderKeeper/app/proxy/spiderctrl.py

@@ -1,9 +1,11 @@

import datetime

import random

-from functools import reduce

+import requests

+import re

-from SpiderKeeper.app import db

-from SpiderKeeper.app.spider.model import SpiderStatus, JobExecution, JobInstance, Project, JobPriority

+from SpiderKeeper.app.extensions.sqlalchemy import db

+from SpiderKeeper.app.blueprints.dashboard.model import SpiderStatus, JobExecution, JobInstance, \

+ Project, JobPriority

class SpiderServiceProxy(object):

@@ -69,7 +71,7 @@ def server(self):

return self._server

-class SpiderAgent():

+class SpiderAgent(object):

def __init__(self):

self.spider_service_instances = []

@@ -80,14 +82,15 @@ def regist(self, spider_service_proxy):

def get_project_list(self):

project_list = self.spider_service_instances[0].get_project_list()

Project.load_project(project_list)

- return [project.to_dict() for project in Project.query.all()]

+ return project_list

def delete_project(self, project):

for spider_service_instance in self.spider_service_instances:

spider_service_instance.delete_project(project.project_name)

def get_spider_list(self, project):

- spider_instance_list = self.spider_service_instances[0].get_spider_list(project.project_name)

+ spider_instance_list = self.spider_service_instances[0]\

+ .get_spider_list(project.project_name)

for spider_instance in spider_instance_list:

spider_instance.project_id = project.id

return spider_instance_list

@@ -115,11 +118,19 @@ def sync_job_status(self, project):

job_execution.start_time = job_execution_info['start_time']

job_execution.end_time = job_execution_info['end_time']

job_execution.running_status = SpiderStatus.FINISHED

- # commit

+

+ res = requests.get(self.log_url(job_execution))

+ res.encoding = 'utf8'

+ raw = res.text[-4096:]

+ match = re.findall(job_execution.RAW_STATS_REGEX, raw, re.DOTALL)

+ if match:

+ job_execution.raw_stats = match[0]

+ job_execution.process_raw_stats()

+

db.session.commit()

def start_spider(self, job_instance):

- project = Project.find_project_by_id(job_instance.project_id)

+ project = Project.query.get(job_instance.project_id)

spider_name = job_instance.spider_name

arguments = {}

if job_instance.spider_arguments:

@@ -153,8 +164,8 @@ def start_spider(self, job_instance):

db.session.commit()

def cancel_spider(self, job_execution):

- job_instance = JobInstance.find_job_instance_by_id(job_execution.job_instance_id)

- project = Project.find_project_by_id(job_instance.project_id)

+ job_instance = JobInstance.query.get(job_execution.job_instance_id)

+ project = Project.query.get(job_instance.project_id)

for spider_service_instance in self.spider_service_instances:

if spider_service_instance.server == job_execution.running_on:

if spider_service_instance.cancel_spider(project.project_name, job_execution.service_job_execution_id):

@@ -170,12 +181,14 @@ def deploy(self, project, file_path):

return True

def log_url(self, job_execution):

- job_instance = JobInstance.find_job_instance_by_id(job_execution.job_instance_id)

- project = Project.find_project_by_id(job_instance.project_id)

+ job_instance = JobInstance.query.get(job_execution.job_instance_id)

+ project = Project.query.get(job_instance.project_id)

for spider_service_instance in self.spider_service_instances:

if spider_service_instance.server == job_execution.running_on:

- return spider_service_instance.log_url(project.project_name, job_instance.spider_name,

- job_execution.service_job_execution_id)

+ return spider_service_instance.log_url(

+ project.project_name, job_instance.spider_name,

+ job_execution.service_job_execution_id

+ )

@property

def servers(self):

diff --git a/SpiderKeeper/app/schedulers/common.py b/SpiderKeeper/app/schedulers/common.py

deleted file mode 100644

index 595518a0..00000000

--- a/SpiderKeeper/app/schedulers/common.py

+++ /dev/null

@@ -1,76 +0,0 @@

-import threading

-import time

-

-from SpiderKeeper.app import scheduler, app, agent, db

-from SpiderKeeper.app.spider.model import Project, JobInstance, SpiderInstance

-

-

-def sync_job_execution_status_job():

- '''

- sync job execution running status

- :return:

- '''

- for project in Project.query.all():

- agent.sync_job_status(project)

- app.logger.debug('[sync_job_execution_status]')

-

-

-def sync_spiders():

- '''

- sync spiders

- :return:

- '''

- for project in Project.query.all():

- spider_instance_list = agent.get_spider_list(project)

- SpiderInstance.update_spider_instances(project.id, spider_instance_list)

- app.logger.debug('[sync_spiders]')

-

-

-def run_spider_job(job_instance_id):

- '''

- run spider by scheduler

- :param job_instance:

- :return:

- '''

- try:

- job_instance = JobInstance.find_job_instance_by_id(job_instance_id)

- agent.start_spider(job_instance)

- app.logger.info('[run_spider_job][project:%s][spider_name:%s][job_instance_id:%s]' % (

- job_instance.project_id, job_instance.spider_name, job_instance.id))

- except Exception as e:

- app.logger.error('[run_spider_job] ' + str(e))

-

-

-def reload_runnable_spider_job_execution():

- '''

- add periodic job to scheduler

- :return:

- '''

- running_job_ids = set([job.id for job in scheduler.get_jobs()])

- app.logger.debug('[running_job_ids] %s' % ','.join(running_job_ids))

- available_job_ids = set()

- # add new job to schedule

- for job_instance in JobInstance.query.filter_by(enabled=0, run_type="periodic").all():

- job_id = "spider_job_%s:%s" % (job_instance.id, int(time.mktime(job_instance.date_modified.timetuple())))

- available_job_ids.add(job_id)

- if job_id not in running_job_ids:

- scheduler.add_job(run_spider_job,

- args=(job_instance.id,),

- trigger='cron',

- id=job_id,

- minute=job_instance.cron_minutes,

- hour=job_instance.cron_hour,

- day=job_instance.cron_day_of_month,

- day_of_week=job_instance.cron_day_of_week,

- month=job_instance.cron_month,

- second=0,

- max_instances=999,

- misfire_grace_time=60 * 60,

- coalesce=True)

- app.logger.info('[load_spider_job][project:%s][spider_name:%s][job_instance_id:%s][job_id:%s]' % (

- job_instance.project_id, job_instance.spider_name, job_instance.id, job_id))

- # remove invalid jobs

- for invalid_job_id in filter(lambda job_id: job_id.startswith("spider_job_"),

- running_job_ids.difference(available_job_ids)):

- scheduler.remove_job(invalid_job_id)

- app.logger.info('[drop_spider_job][job_id:%s]' % invalid_job_id)
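The removed `reload_runnable_spider_job_execution` reconciles scheduler state with the database using set arithmetic, keyed by ids of the form `spider_job_<id>:<mtime>`. A minimal sketch of that reconciliation without APScheduler or a database, which may help when porting it to the separate scheduler module; `job_id_for` and `reconcile` are hypothetical helpers that mirror the deleted code's id scheme.

```python
import time


def job_id_for(job_instance_id, date_modified):
    # Embedding the modification time gives an edited job a new id,
    # so its old trigger is dropped and a fresh one is added.
    return "spider_job_%s:%s" % (job_instance_id, int(time.mktime(date_modified.timetuple())))


def reconcile(running_job_ids, enabled_jobs):
    """Return (to_add, to_remove) sets of scheduler job ids.

    running_job_ids: ids currently registered in the scheduler.
    enabled_jobs: iterable of (job_instance_id, date_modified) for enabled periodic jobs.
    """
    running = set(running_job_ids)
    available = {job_id_for(jid, modified) for jid, modified in enabled_jobs}
    to_add = available - running
    # Only drop ids this code created; other scheduler jobs are left alone.
    to_remove = {jid for jid in running - available if jid.startswith("spider_job_")}
    return to_add, to_remove
```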

diff --git a/SpiderKeeper/app/static/css/app.css b/SpiderKeeper/app/static/css/app.css

index 67a42216..549a4798 100644

--- a/SpiderKeeper/app/static/css/app.css

+++ b/SpiderKeeper/app/static/css/app.css

@@ -6,7 +6,6 @@

.txt-args {

font-size: 10px;

- display: block;

white-space: nowrap;

overflow: hidden;

text-overflow: ellipsis;

diff --git a/SpiderKeeper/app/templates/base.html b/SpiderKeeper/app/templates/base.html

index 92b1c1f6..363e70fc 100644

--- a/SpiderKeeper/app/templates/base.html

+++ b/SpiderKeeper/app/templates/base.html

@@ -7,25 +7,38 @@

<!-- Tell the browser to be responsive to screen width -->

<meta content="width=device-width, initial-scale=1, maximum-scale=1, user-scalable=no" name="viewport">

<!-- Bootstrap 3.3.6 -->

- <link rel="stylesheet" href="/static/css/bootstrap.min.css">

+ <link rel="stylesheet" href="{{ url_for('static', filename='css/bootstrap.min.css') }}">

<!-- Font Awesome -->

- <link rel="stylesheet" href="/static/css/font-awesome.min.css">

+ <link rel="stylesheet" href="{{ url_for('static', filename='css/font-awesome.min.css') }}">

<!-- Ionicons -->

- <link rel="stylesheet" href="/static/css/ionicons.min.css">

+ <link rel="stylesheet" href="{{ url_for('static', filename='css/ionicons.min.css') }}">

<!-- Theme style -->

- <link rel="stylesheet" href="/static/css/AdminLTE.min.css">

+ <link rel="stylesheet" href="{{ url_for('static', filename='css/AdminLTE.min.css') }}">

<!-- AdminLTE Skins. Choose a skin from the css/skins

folder instead of downloading all of them to reduce the load. -->

- <link rel="stylesheet" href="/static/css/skins/skin-black-light.min.css">

+ <link rel="stylesheet" href="{{ url_for('static', filename='css/skins/skin-black-light.min.css') }}">

<!--custom css-->

- <link rel="stylesheet" href="/static/css/app.css">

+ <link rel="stylesheet" href="{{ url_for('static', filename='css/app.css') }}">

<!-- HTML5 Shim and Respond.js IE8 support of HTML5 elements and media queries -->

<!-- WARNING: Respond.js doesn't work if you view the page via file:// -->

<!--[if lt IE 9]>

- <script src="/static/js/html5shiv.min.js"></script>

- <script src="/static/js/respond.min.js"></script>

+ <script src="{{ url_for('static', filename='js/html5shiv.min.js') }}"></script>

+ <script src="{{ url_for('static', filename='js/respond.min.js') }}"></script>

<![endif]-->

+

+ <!-- jQuery 2.2.3 -->

+ <script src="{{ url_for('static', filename='js/jquery-2.2.3.min.js') }}"></script>

+ <!-- Bootstrap 3.3.6 -->

+ <script src="{{ url_for('static', filename='js/bootstrap.min.js') }}"></script>

+ <!-- SlimScroll -->

+ <script src="{{ url_for('static', filename='js/jquery.slimscroll.min.js') }}"></script>

+ <!-- FastClick -->

+ <script src="{{ url_for('static', filename='js/fastclick.min.js') }}"></script>

+ <!-- AdminLTE App -->

+ <script src="{{ url_for('static', filename='js/AdminLTE.min.js') }}"></script>

+ <!-- AdminLTE for demo purposes -->

+ <script src="{{ url_for('static', filename='js/demo.js') }}"></script>

</head>

<body class="hold-transition skin-black-light sidebar-mini">

<!-- Site wrapper -->

@@ -58,11 +71,8 @@

</a>

<ul class="dropdown-menu">

{% for project in project_list %}

- <li><a href="/project/{{ project.id }}">{{ project.project_name }}</a></li>

+ <li><a href="/project/{{ project.id }}">{{ project.project_name }}</a></li>

{% endfor %}

- <li role="separator" class="divider"></li>

- <li><a href="#" data-toggle="modal" data-target="#project-create-modal">Create Project</a>

- </li>

</ul>

</li>

</ul>

@@ -73,6 +83,7 @@

<!-- =============================================== -->

<!-- Left side column. contains the sidebar -->

+ {% block main_sidebar %}

<aside class="main-sidebar">

<!-- sidebar: style can be found in sidebar.less -->

<section class="sidebar">

@@ -84,13 +95,13 @@

</div>

<ul class="sidebar-menu">

<li class="header">JOBS</li>

- <li><a href="/project/{{ project.id }}/job/dashboard"><i

+ <li><a href="{{ url_for('dashboard.job_dashboard', project_id=project.id) }}"><i

class="fa fa-dashboard text-blue"></i>

<span>Dashboard</span></a></li>

- <li><a href="/project/{{ project.id }}/job/periodic"><i class="fa fa-tasks text-green"></i>

+ <li><a href="{{ url_for('dashboard.job_periodic', project_id=project.id) }}"><i class="fa fa-tasks text-green"></i>

<span>Periodic Jobs</span></a></li>

<li class="header">SPIDERS</li>

- <li><a href="/project/{{ project.id }}/spider/dashboard"><i class="fa fa-flask text-red"></i>

+ <li><a href="{{ url_for('dashboard.spider_dashboard', project_id=project.id) }}"><i class="fa fa-flask text-red"></i>

<span>Dashboard</span></a>

</li>

<li><a href="/project/{{ project.id }}/spider/deploy"><i class="fa fa-server text-orange"></i>

@@ -100,7 +111,7 @@

<li><a href="/project/{{ project.id }}/project/stats"><i class="fa fa-area-chart text-gray"></i> <span>Running Stats</span></a>

</li>

<!--<li><a href="#"><i class="fa fa-circle-o text-red"></i> <span>Members</span></a></li>-->

- <li><a href="/project/manage"><i class="fa fa-gears text-red"></i> <span>Manage</span></a></li>

+ <li><a href="{{ url_for('dashboard.project_manage', project_id=project.id) }}"><i class="fa fa-gears text-red"></i> <span>Manage</span></a></li>

<li class="header">SERVER</li>

<li><a href="/project/{{ project.id }}/server/stats"><i class="fa fa-bolt text-red"></i> <span>Usage Stats</span></a>

</li>

@@ -108,6 +119,7 @@

</section>

<!-- /.sidebar -->

</aside>

+ {% endblock %}

<!-- =============================================== -->

@@ -128,53 +140,15 @@

<footer class="main-footer">

<div class="pull-right hidden-xs">

- <b>Version</b> 1.2.0

+ <b>Version</b> {{ sk_version }}

</div>

- <strong><a href="https://github.com/DormyMo/SpiderKeeper">SpiderKeeper</a>.</strong>

+ <strong><a href="https://github.com/kalombos/SpiderKeeper/">SpiderKeeper</a>.</strong>

</footer>

-

- <div class="modal fade" role="dialog" id="project-create-modal">

- <div class="modal-dialog" role="document">

- <div class="modal-content">

- <form action="/project/create" method="post">

- <div class="modal-header">

- <button type="button" class="close" data-dismiss="modal" aria-label="Close">

- <span aria-hidden="true">×</span></button>

- <h4 class="modal-title">Create Project</h4>

- </div>

- <div class="modal-body">

- <div class="form-group">

- <label for="project-name">Project Name</label>

- <input type="text" name="project_name" id="project-name" class="form-control"

- placeholder="Project Name">

- </div>

- </div>

- <div class="modal-footer">

- <button type="button" class="btn btn-default pull-left" data-dismiss="modal">Close</button>

- <button type="submit" class="btn btn-primary">Create</button>

- </div>

- </form>

- </div>

- <!-- /.modal-content -->

- </div>

- <!-- /.modal-dialog -->

- </div>

<!-- /.modal -->

</div>

<!-- ./wrapper -->

-<!-- jQuery 2.2.3 -->

-<script src="/static/js/jquery-2.2.3.min.js"></script>

-<!-- Bootstrap 3.3.6 -->

-<script src="/static/js/bootstrap.min.js"></script>

-<!-- SlimScroll -->

-<script src="/static/js/jquery.slimscroll.min.js"></script>

-<!-- FastClick -->

-<script src="/static/js/fastclick.min.js"></script>

-<!-- AdminLTE App -->

-<script src="/static/js/AdminLTE.min.js"></script>

-<!-- AdminLTE for demo purposes -->

-<script src="/static/js/demo.js"></script>

+

{% block script %}{% endblock %}

</body>

</html>

\ No newline at end of file

diff --git a/SpiderKeeper/app/templates/index.html b/SpiderKeeper/app/templates/index.html

new file mode 100644

index 00000000..ea49515a

--- /dev/null

+++ b/SpiderKeeper/app/templates/index.html

@@ -0,0 +1,12 @@

+{% extends "base.html" %}

+{% block main_sidebar %}

+{% endblock %}

+{% block content_header %}

+ <div class="callout callout-warning">

+ <ul>

+ <li><h4>No projects found on scrapyd. Please upload one and refresh the page after a while.</h4></li>

+ </ul>

+</div>

+{% endblock %}

+{% block content_body %}

+{% endblock %}

\ No newline at end of file

diff --git a/SpiderKeeper/app/templates/job_dashboard.html b/SpiderKeeper/app/templates/job_dashboard.html

index 494b93d7..5caa81bd 100644

--- a/SpiderKeeper/app/templates/job_dashboard.html

+++ b/SpiderKeeper/app/templates/job_dashboard.html

@@ -10,7 +10,7 @@ <h1>Job Dashboard</h1>

top: 15px;

right: 10px;">

<button type="button" class="btn btn-success btn-flat" style="margin-top: -10px;" data-toggle="modal"

- data-target="#job-run-modal">RunOnce

+ data-target="#job-run-modal">Run Once

</button>

</ol>

{% endblock %}

@@ -38,7 +38,7 @@ <h3 class="box-title">Next Jobs</h3>

{% if job.job_instance %}

<tr>

<td>{{ job.job_execution_id }}</td>

- <td><a href="/project/1/job/periodic#{{ job.job_instance_id }}">{{ job.job_instance_id }}</a></td>

+ <td><a href="{{ url_for('dashboard.job_periodic', project_id=job.project_id) }}#{{ job.job_instance_id }}">{{ job.job_instance_id }}</a></td>

<td>{{ job.job_instance.spider_name }}</td>

<td class="txt-args" data-toggle="tooltip" data-placement="right"

title="{{ job.job_instance.spider_arguments }}">{{ job.job_instance.spider_arguments }}

@@ -95,7 +95,7 @@ <h3 class="box-title">Running Jobs</h3>

{% if job.job_instance %}

<tr>

<td>{{ job.job_execution_id }}</td>

- <td><a href="/project/1/job/periodic#{{ job.job_instance_id }}">{{ job.job_instance_id }}</a></td>

+ <td><a href="{{ url_for('dashboard.job_periodic', project_id=job.project_id) }}#{{ job.job_instance_id }}">{{ job.job_instance_id }}</a></td>

<td>{{ job.job_instance.spider_name }}</td>

<td class="txt-args" data-toggle="tooltip" data-placement="right"

title="{{ job.job_instance.spider_arguments }}">{{ job.job_instance.spider_arguments }}

@@ -119,7 +119,7 @@ <h3 class="box-title">Running Jobs</h3>

{% endif %}

<td>{{ timedelta(now,job.start_time) }}</td>

<td>{{ job.start_time }}</td>

- <td><a href="/project/{{ project.id }}/jobexecs/{{ job.job_execution_id }}/log" target="_blank"

+ <td><a href="{{ url_for('dashboard.job_log', job_exec_id=job.job_execution_id) }}" target="_blank"

data-toggle="tooltip" data-placement="top" title="{{ job.service_job_execution_id }}">Log</a>

</td>

<td style="font-size: 10px;">{{ job.running_on }}</td>

@@ -153,14 +153,17 @@ <h3 class="box-title">Completed Jobs</h3>

<th style="width: 20px">Priority</th>

<th style="width: 40px">Runtime</th>

<th style="width: 120px">Started</th>

+ <th style="width: 10px">Items</th>

+ <th style="width: 10px">Warnings</th>

+ <th style="width: 10px">Errors</th>

<th style="width: 10px">Log</th>

<th style="width: 10px">Status</th>

</tr>

{% for job in job_status.COMPLETED %}

{% if job.job_instance %}

- <tr>

+ <tr class="{% if job.has_errors %}danger{% elif job.has_warnings %}warning{% endif %}">

<td>{{ job.job_execution_id }}</td>

- <td><a href="/project/1/job/periodic#{{ job.job_instance_id }}">{{ job.job_instance_id }}</a></td>

+ <td><a href="{{ url_for('dashboard.job_periodic', project_id=job.project_id) }}#{{ job.job_instance_id }}">{{ job.job_instance_id }}</a></td>

<td>{{ job.job_instance.spider_name }}</td>

<td class="txt-args" data-toggle="tooltip" data-placement="right"

title="{{ job.job_instance.spider_arguments }}">{{ job.job_instance.spider_arguments }}

@@ -184,17 +187,20 @@ <h3 class="box-title">Completed Jobs</h3>

{% endif %}

<td>{{ timedelta(job.end_time,job.start_time) }}</td>

<td>{{ job.start_time }}</td>

- <td><a href="/project/{{ project.id }}/jobexecs/{{ job.job_execution_id }}/log" target="_blank"

+ <td>{{ job.items_count }}</td>

+ <td>{{ job.warnings_count }}</td>

+ <td>{{ job.errors_count }}</td>

+ <td><a href="{{ url_for('dashboard.job_log', job_exec_id=job.job_execution_id) }}" target="_blank"

data-toggle="tooltip" data-placement="top" title="{{ job.service_job_execution_id }}">Log</a>

</td>

{% if job.running_status == 2 %}

- <td>

- <span class="label label-success">FINISHED</span>

- </td>

- {% else %}

- <td>

- <span class="label label-danger">CANCELED</span>

- </td>

+ <td>

+ <span class="label label-success">FINISHED</span>

+ </td>

+ {% else %}

+ <td>

+ <span class="label label-danger">CANCELED</span>

+ </td>

{% endif %}

</tr>

{% endif %}
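The Completed Jobs row class above gives errors precedence over warnings: a row with any errors is `danger`, otherwise a row with warnings is `warning`, otherwise it gets no highlight. The plain-Python sketch below restates that precedence so it can be unit-tested outside the template. It assumes, hypothetically, that `has_errors` and `has_warnings` mean `errors_count > 0` and `warnings_count > 0`. The diff does not show how the model defines them, and `CompletedJob` and `row_class` are illustrative names rather than SpiderKeeper code.

```python
from dataclasses import dataclass


@dataclass
class CompletedJob:
    """Hypothetical stand-in for a completed job execution row."""
    items_count: int = 0
    warnings_count: int = 0
    errors_count: int = 0

    @property
    def has_errors(self) -> bool:
        # Assumption: any logged error marks the run as failed-ish.
        return self.errors_count > 0

    @property
    def has_warnings(self) -> bool:
        # Assumption: any logged warning marks the run as suspicious.
        return self.warnings_count > 0


def row_class(job: CompletedJob) -> str:
    """Mirror {% if job.has_errors %}danger{% elif job.has_warnings %}warning{% endif %}."""
    if job.has_errors:
        return "danger"   # errors win even when warnings are also present
    if job.has_warnings:
        return "warning"
    return ""             # clean run: no Bootstrap contextual class
```

For example, a job with 3 warnings and 1 error gets `danger`, not `warning`, because the `if` branch is checked before the `elif`.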

diff --git a/SpiderKeeper/app/templates/job_log.html b/SpiderKeeper/app/templates/job_log.html

deleted file mode 100644

index a130775b..00000000

--- a/SpiderKeeper/app/templates/job_log.html

+++ /dev/null

@@ -1,16 +0,0 @@

-<html>

-<meta charset="utf-8">

-<style>

- .p-log {

- font-size: 12px;

- line-height: 1.5em;

- color: #1f0909;

- text-align: left

- }

-</style>

-<body style="background-color:#F3F2EE;">

-{% for line in log_lines %}

-<p class="p-log">{{ line }}</p>

-{% endfor %}

-</body>

-</html>

\ No newline at end of file

diff --git a/SpiderKeeper/app/templates/job_periodic.html b/SpiderKeeper/app/templates/job_periodic.html

index beeb9b94..1b7ba2f6 100644

--- a/SpiderKeeper/app/templates/job_periodic.html

+++ b/SpiderKeeper/app/templates/job_periodic.html

@@ -12,7 +12,10 @@ <h1>Periodic jobs</h1>

<button type="button" class="btn btn-success btn-flat" style="margin-top: -10px;" data-toggle="modal"

data-target="#job-run-modal">Add Job

</button>

+ <a role="button" href="{{ url_for('dashboard.jobs_remove', project_id=project.id) }}" class="btn btn-danger btn-flat" style="margin-top: -10px;">Remove All

+ </a>

</ol>

+

{% endblock %}

{% block content_body %}

<div class="box">

@@ -77,10 +80,10 @@ <h3 class="box-title">Periodic jobs (Spiders)</h3>

</td>

{% endif %}

<td>

- <a href="/project/{{ project.id }}/job/{{ job_instance.job_instance_id }}/run"><span

+ <a href="{{ url_for('dashboard.job_run', job_instance_id=job_instance.job_instance_id) }}"><span

class="label label-info">Run</span></a>

- <a href="/project/{{ project.id }}/job/{{ job_instance.job_instance_id }}/remove"><span

- class="label label-danger">Remove</span></a>

+ <a href="{{ url_for('dashboard.job_remove', job_instance_id=job_instance.job_instance_id) }}">

+ <span class="label label-danger">Remove</span></a>

</td>

</tr>

{% endfor %}

diff --git a/SpiderKeeper/app/templates/project_manage.html b/SpiderKeeper/app/templates/project_manage.html

index a21dfc01..4630c392 100644

--- a/SpiderKeeper/app/templates/project_manage.html

+++ b/SpiderKeeper/app/templates/project_manage.html

@@ -24,11 +24,7 @@ <h3 class="box-title">{{ project.project_name }}</h3>

</div>

<div class="box-footer text-black">

{% if project %}

- <a href="/project/{{ project.id }}/delete" class="btn btn-block btn-danger btn-flat">Delete Project</a>

- {% else %}

- <a class="btn btn-block btn-info btn-flat" data-toggle="modal"

- data-target="#project-create-modal">Create Project

- </a>

+ <a href="/project/{{ project.id }}/delete" class="btn btn-block btn-danger btn-flat">Delete Project</a>

{% endif %}

</div>

</div>

diff --git a/SpiderKeeper/app/templates/project_stats.html b/SpiderKeeper/app/templates/project_stats.html

index 6df9831a..0d596b02 100644

--- a/SpiderKeeper/app/templates/project_stats.html

+++ b/SpiderKeeper/app/templates/project_stats.html

@@ -18,7 +18,7 @@ <h3 class="box-title">Spider Running Stats (last 24 hours)</h3>

</div>

{% endblock %}

{% block script %}

-<script src="/static/js/Chart.min.js"></script>

+<script src="{{ url_for('static', filename='js/Chart.min.js') }}"></script>

<script>

//-------------

//- BAR CHART -

diff --git a/SpiderKeeper/app/templates/spider_dashboard.html b/SpiderKeeper/app/templates/spider_dashboard.html

index 5092edd2..a0068d46 100644

--- a/SpiderKeeper/app/templates/spider_dashboard.html

+++ b/SpiderKeeper/app/templates/spider_dashboard.html

@@ -15,12 +15,12 @@ <h3 class="box-title">Periodic jobs (Spiders)</h3>

<th style="width: 50px">Last Runtime</th>

<th style="width: 50px">Avg Runtime</th>

</tr>

- {% for spider_instance in spider_instance_list %}

+ {% for spider in spiders %}

<tr>

- <td>{{ spider_instance.spider_instance_id }}</td>

- <td>{{ spider_instance.spider_name }}</td>

- <td>{{ spider_instance.spider_last_runtime }}</td>

- <td>{{ readable_time(spider_instance.spider_avg_runtime) }}</td>

+ <td>{{ spider.id }}</td>

+ <td>{{ spider.spider_name }}</td>

+ <td>{{ spider.last_runtime or '-' }}</td>

+ <td>{{ readable_time(spider.avg_runtime) }}</td>

</tr>

{% endfor %}

</table>

diff --git a/SpiderKeeper/app/util/__init__.py b/SpiderKeeper/app/util/__init__.py

index 8dd75172..f8d0d14d 100644

--- a/SpiderKeeper/app/util/__init__.py

+++ b/SpiderKeeper/app/util/__init__.py

@@ -1,4 +1,5 @@

def project_path():

- import inspect, os

+ import os

+ import inspect

this_file = inspect.getfile(inspect.currentframe())

- return os.path.abspath(os.path.dirname(this_file)+'/../')

\ No newline at end of file

+ return os.path.abspath(os.path.dirname(this_file)+'/../')
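`project_path()` takes the directory of `util/__init__.py` and steps one level up, so it resolves to `SpiderKeeper/app`. The sketch below gives a hypothetical, separator-agnostic variant that builds the path with `os.path.join` and `os.pardir` instead of concatenating `'/../'`. It takes the file path as an argument so it can be tested in isolation. Inside a normally imported module, passing `__file__` should be equivalent to `inspect.getfile(inspect.currentframe())`, but treat that as an assumption rather than a guarantee for every loader. `app_root` is an illustrative name, not part of the codebase.

```python
import os


def app_root(this_file: str) -> str:
    """Return the absolute path of the parent of this_file's directory.

    Hypothetical helper: same result as
    os.path.abspath(os.path.dirname(this_file) + '/../') on POSIX,
    but without hard-coding '/' as the separator.
    """
    return os.path.abspath(os.path.join(os.path.dirname(this_file), os.pardir))
```

In `util/__init__.py` this would be called as `app_root(__file__)`.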

diff --git a/SpiderKeeper/app/util/http.py b/SpiderKeeper/app/util/http.py

index ecc8f441..c0e81386 100644

--- a/SpiderKeeper/app/util/http.py

+++ b/SpiderKeeper/app/util/http.py

@@ -47,8 +47,10 @@ def request(request_type, url, data=None, retry_times=5, return_type="text"):

res = request_get(url, retry_times)

if request_type == 'post':

res = request_post(url, data, retry_times)

- if not res: return res

- if return_type == 'text': return res.text

+ if not res:

+ return res

+ if return_type == 'text':

+ return res.text

if return_type == 'json':

try: